PUTTING OUR ROBOTS ON THE MAP

The calibration, localization, and mapping process that helps our fleet find its way.

To navigate safely, a robot needs to accurately perceive and interpret its environment.

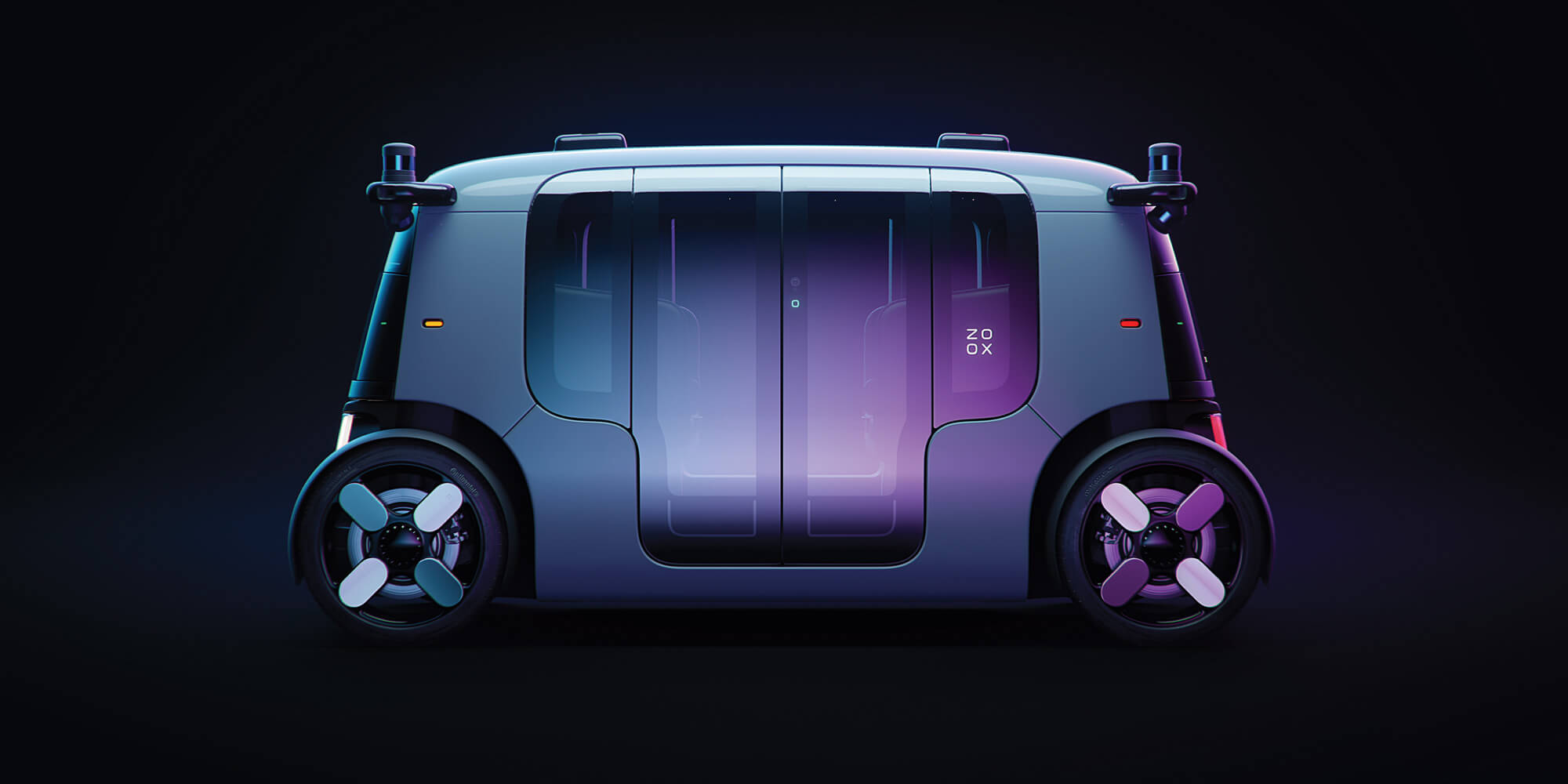

Humans do this with a combination of sensors (like our eyes and ears) and learned information (like our route to work or our knowledge of how traffic crossings work). The Zoox vehicle is similar, combining both real-time sensor data and detailed maps to move through its environment.

But first, we need to gather this data and feed it into the vehicle in a format that it can understand. This autonomous vehicle mapping process is led by two teams at Zoox: CLAMS (Calibration, Localization, and Mapping, Simultaneously) and ZRN (Zoox Road Network).

Together, they enable Zoox vehicles to know exactly where they are and where they’re going.

Calibration: first things first

In order to have a clear picture of the world, our vehicle first needs to know where its sensors are. That’s calibration.

Zoox vehicles use overlapping data from multiple sensors to calculate the exact position of each one. This is a continual, iterative process: our vehicle checks and re-checks the location of its sensors as it drives, ensuring these measurements are always accurate.

Calibration is the process of understanding sensor locations.

Sensor calibration typically involves showing a robot specific calibration targets, much like an eye test. But this isn’t practical for a vehicle that drives all over the city. Instead, Zoox vehicles use features of their natural environment to calibrate their sensors. This is the secret to creating an adaptable and reliable calibration system.
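To make the idea concrete, here is a minimal sketch of target-free calibration in two dimensions. It assumes two sensors have each observed the same set of landmarks (say, pole bases or building corners) and that the matches between them are already known. The function names and the planar simplification are illustrative assumptions, not a description of Zoox's actual calibration software.

```python
import math


def estimate_planar_extrinsic(points_a, points_b):
    """Least-squares yaw and offset mapping sensor A's points onto sensor B's.

    points_a[i] and points_b[i] are (x, y) positions of the same landmark,
    each expressed in its own sensor's frame (hypothetical inputs).
    """
    n = len(points_a)
    ax = sum(p[0] for p in points_a) / n
    ay = sum(p[1] for p in points_a) / n
    bx = sum(p[0] for p in points_b) / n
    by = sum(p[1] for p in points_b) / n

    # Accumulate dot and cross products of the centered point pairs.
    dot = cross = 0.0
    for (pax, pay), (pbx, pby) in zip(points_a, points_b):
        dax, day = pax - ax, pay - ay
        dbx, dby = pbx - bx, pby - by
        dot += dax * dbx + day * dby
        cross += dax * dby - day * dbx

    yaw = math.atan2(cross, dot)
    c, s = math.cos(yaw), math.sin(yaw)
    # Offset that maps A's centroid onto B's centroid after rotation.
    tx = bx - (c * ax - s * ay)
    ty = by - (s * ax + c * ay)
    return yaw, (tx, ty)


def apply_extrinsic(yaw, offset, point):
    """Rotate a point by yaw, then shift it by offset."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * point[0] - s * point[1] + offset[0],
            s * point[0] + c * point[1] + offset[1])
```

Re-running an estimate like this as new landmarks come into view is one simple way to picture the "check and re-check" loop described above. A real system would work in 3D and would have to find the landmark matches itself.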

Mapping: a detailed picture

Just like other road users, Zoox vehicles need a map to follow. And every map starts with data.

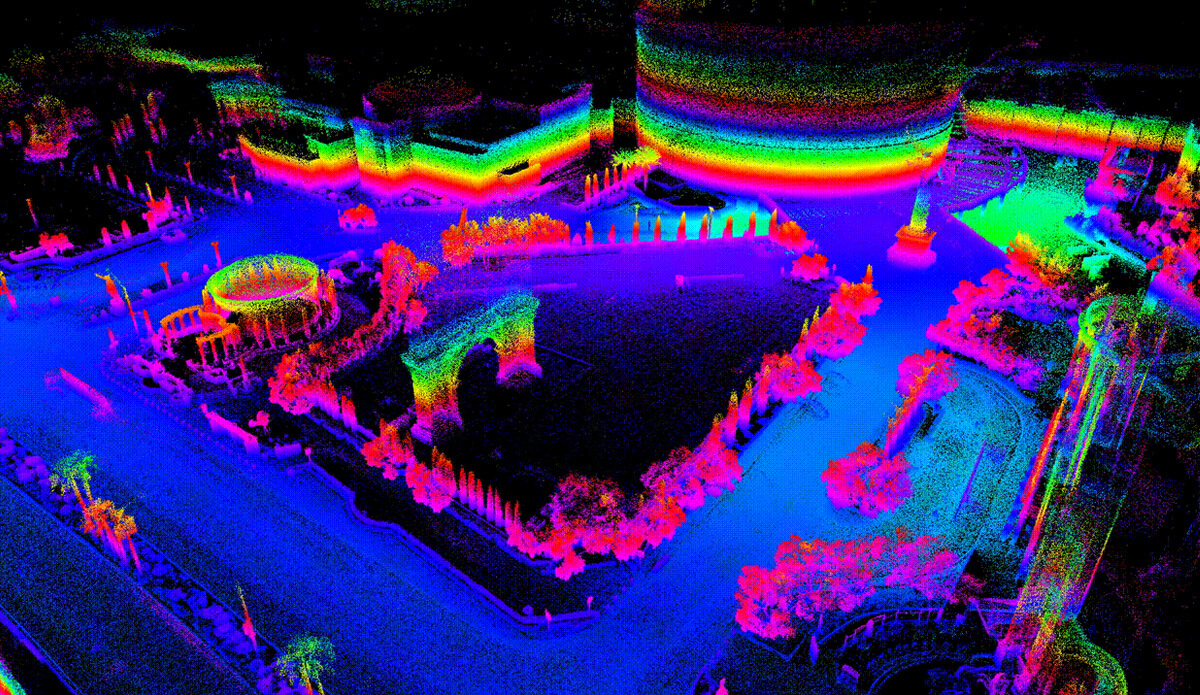

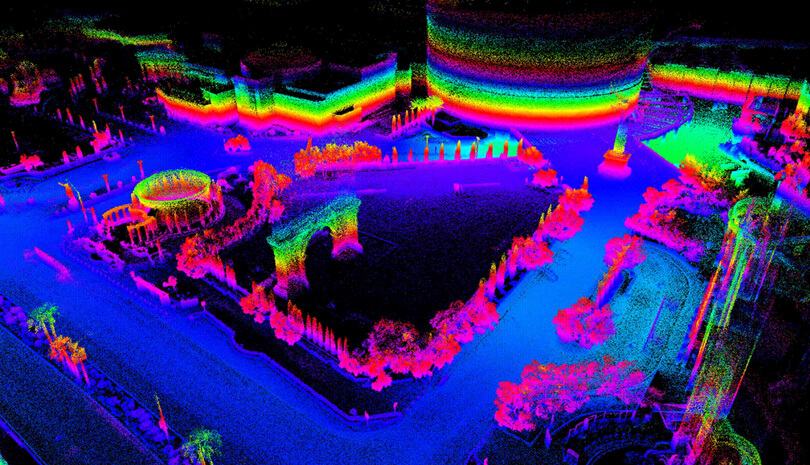

We gather our data first-hand, driving around in our Toyota Highlanders, which are outfitted with our full sensor architecture. After this raw data has been collected, the CLAMS team transforms it into a high definition 3D map. They use tools to automate and streamline the process, including AI that determines which objects are temporary and moveable (like cars or pedestrians) and which are permanent (like buildings or medians).
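One simple way to picture the split between temporary and permanent objects is to compare several drives through the same place. The sketch below swaps the AI described above for a much cruder heuristic: it keeps only the regions of space that show up in most passes. The voxel size, the threshold, and the function names are all assumptions made for illustration.

```python
from collections import Counter


def voxel_key(point, voxel_size):
    """Map an (x, y, z) point to the integer index of its voxel."""
    return tuple(int(c // voxel_size) for c in point)


def persistent_voxels(passes, voxel_size=0.5, min_fraction=0.8):
    """Return voxels occupied in at least min_fraction of the passes.

    passes is a list of point lists, one per drive through the area,
    all expressed in the same map frame (an assumption of this sketch).
    """
    counts = Counter()
    for points in passes:
        # Count each voxel once per pass, however many points fall in it.
        counts.update({voxel_key(p, voxel_size) for p in points})
    threshold = min_fraction * len(passes)
    return {voxel for voxel, seen in counts.items() if seen >= threshold}
```

A wall appears on every pass and survives the filter. A car that was parked during only one drive does not.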

“Because our sensor architecture is the same on our L3 test vehicles and our purpose-built L5 vehicles, we can use any vehicle in the fleet to update our maps.” TAYLOR ARNICAR, STAFF TECHNICAL PROGRAM MANAGER

This map is then given to the ZRN team, who build the road network on top of it with the help of our automated tools. The ZRN encodes crucial information, like where traffic lights and stop signs are, what the speed limit is in a given area, or if there’s a bike lane or keep-clear zone.
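As a rough illustration of what "encoding" this information can look like, the sketch below stores each stretch of lane as a record with its rules attached and links the records into a graph that a planner can search. The field names, the mph unit, and the `RoadNetwork` class are hypothetical and are not the real ZRN format.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class LaneSegment:
    segment_id: str
    speed_limit_mph: float                           # unit is an assumption
    is_bike_lane: bool = False
    is_keep_clear: bool = False
    controls: list = field(default_factory=list)     # e.g. "traffic_light", "stop_sign"
    successors: list = field(default_factory=list)   # ids of segments that follow


class RoadNetwork:
    def __init__(self):
        self.segments = {}

    def add(self, segment):
        self.segments[segment.segment_id] = segment

    def route(self, start_id, goal_id):
        """Breadth-first search over drivable successors; None if unreachable."""
        queue = deque([[start_id]])
        visited = {start_id}
        while queue:
            path = queue.popleft()
            if path[-1] == goal_id:
                return path
            for next_id in self.segments[path[-1]].successors:
                segment = self.segments.get(next_id)
                # Skip unknown segments, bike lanes, and anything already seen.
                if segment is None or segment.is_bike_lane or next_id in visited:
                    continue
                visited.add(next_id)
                queue.append(path + [next_id])
        return None
```

Keeping the rules on the segments themselves means a route is more than a list of turns: each step also carries the speed limit and the controls the vehicle should expect along it.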

These two maps are combined and automatically downloaded onto our vehicles. With the full environmental layout available to them, our vehicles can navigate from A to B knowing what they should expect to see around each and every corner.

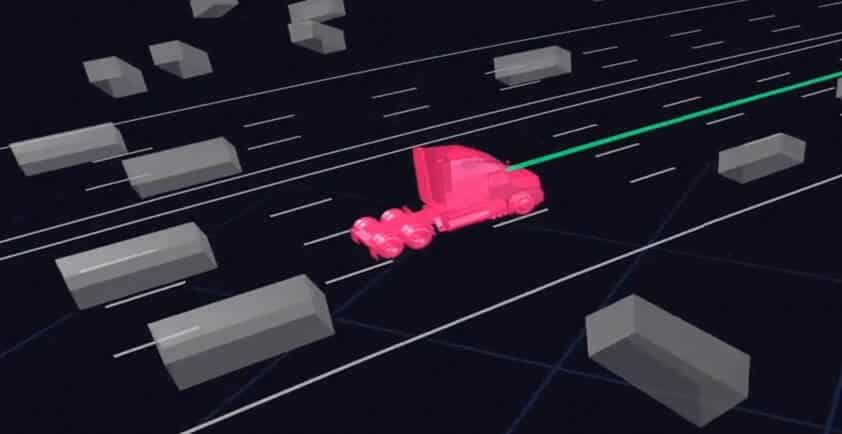

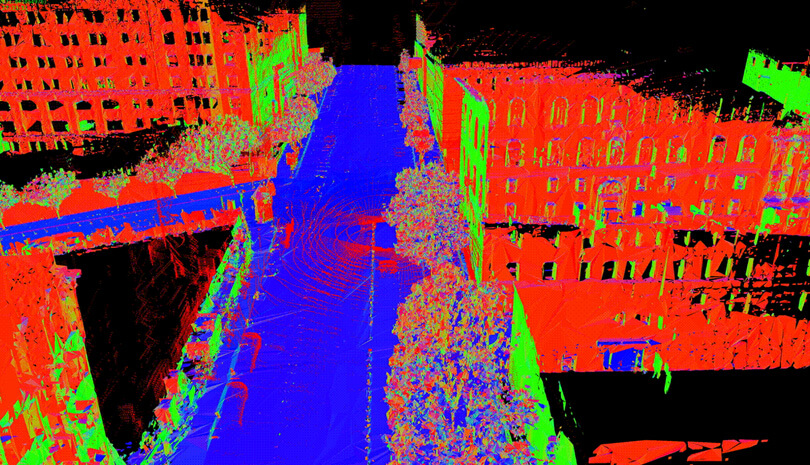

A raw lidar point cloud of Caesars Palace in Las Vegas, before it’s turned into an efficient mesh representation for the 3D map.

Localization: where am I?

You need to know where you are before you can plan how to get to your destination. For our vehicle, this means precisely and reliably determining its location by matching real-time sensor data to its map.

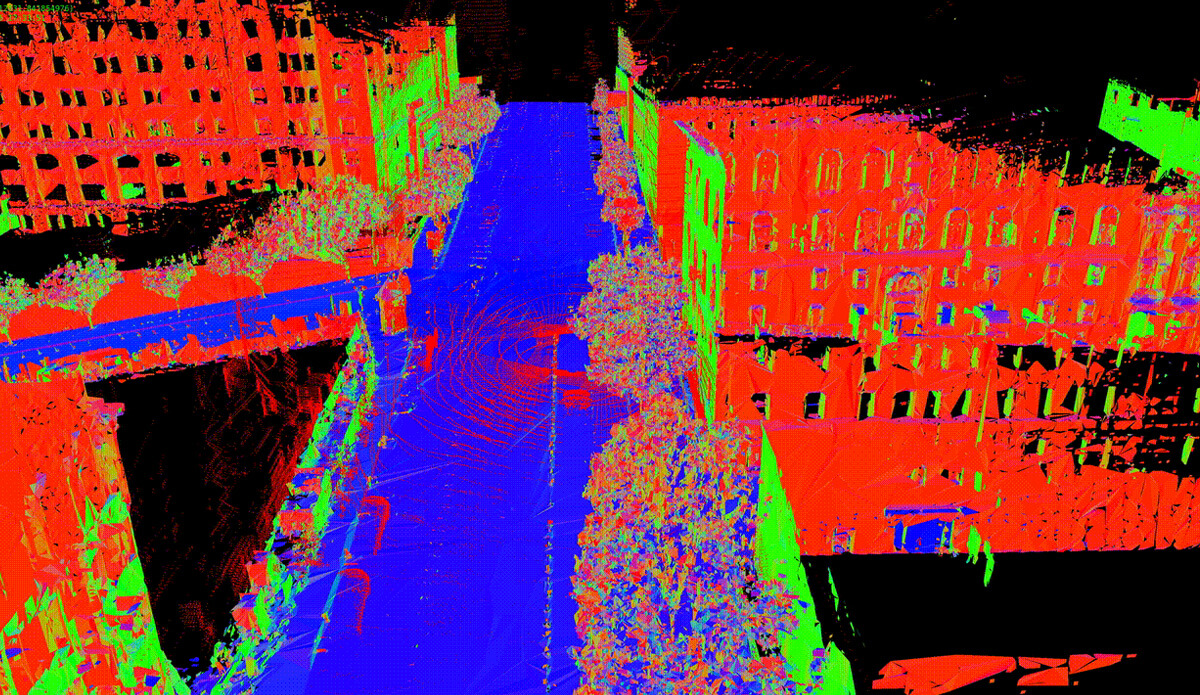

The Zoox vehicle uses a number of inputs for localization, including lidars, cameras, accelerometers, gyroscopes, wheel speeds, and steering angles. Together, these let it pinpoint its exact location and velocity up to 200 times a second.

A Zoox vehicle aligning lidar data to our 3D map to localize itself in downtown San Francisco.
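One way to see how fast motion sensors and slower map matching can work together is the toy estimator below. It advances a 2D pose from wheel speed and yaw rate at every tick, then nudges that pose toward a map-matched fix whenever one arrives. The class name, the fixed blending gain, and the planar model are assumptions for this sketch. Production localizers generally rely on more sophisticated probabilistic estimators.

```python
import math


class PoseEstimator:
    """Toy 2D pose tracker: dead reckoning plus blended map corrections."""

    def __init__(self, x=0.0, y=0.0, heading=0.0):
        self.x = x
        self.y = y
        self.heading = heading  # radians

    def predict(self, wheel_speed, yaw_rate, dt):
        """Advance the pose using wheel speed (m/s) and gyro yaw rate (rad/s)."""
        self.heading += yaw_rate * dt
        self.x += wheel_speed * math.cos(self.heading) * dt
        self.y += wheel_speed * math.sin(self.heading) * dt

    def correct(self, map_x, map_y, map_heading, gain=0.3):
        """Pull the pose part of the way toward a map-matched fix."""
        self.x += gain * (map_x - self.x)
        self.y += gain * (map_y - self.y)
        # Wrap the heading error into (-pi, pi] before blending.
        error = math.atan2(math.sin(map_heading - self.heading),
                           math.cos(map_heading - self.heading))
        self.heading += gain * error
```

At 200 updates a second, `dt` would be 0.005 seconds. Calling `predict` 200 times at 10 m/s moves the estimate roughly 10 meters, and a single `correct` call then trims whatever drift has built up.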

“Localization is fundamental. Just like humans, Zoox vehicles need to know where they are in the world before they can safely and successfully navigate to their destination.” DEREK ADAMS, MANAGER, CLAMS SOFTWARE INFRASTRUCTURE

Autonomous vehicle mapping: always moving forward

This mapping process enables our entire fleet to react to an ever-changing city in real time. Because each vehicle compares what it sees to what should theoretically be there, it can recognize new conditions as they crop up. If it discovers something that’s not on the map, like a closed lane or a construction zone, it can autonomously plan a new route while alerting other vehicles and flagging the change to our CLAMS and ZRN teams.
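The comparison between "what it sees" and "what should be there" can be sketched as a set difference over grid cells. The example below flags a lane when enough occupied cells inside it are not explained by the map. The grid representation, the threshold, and the function name are illustrative assumptions, and a real system would also need to tell a closed lane apart from ordinary moving traffic.

```python
def detect_blocked_lanes(lane_cells, mapped_static_cells, observed_cells, min_cells=3):
    """Return sorted lane ids with at least min_cells unexplained occupied cells.

    lane_cells maps a lane id to the grid cells it covers.
    mapped_static_cells are cells the map already expects to be occupied.
    observed_cells are cells the sensors currently report as occupied.
    """
    unexplained = set(observed_cells) - set(mapped_static_cells)
    blocked = []
    for lane_id, cells in lane_cells.items():
        if len(unexplained & set(cells)) >= min_cells:
            blocked.append(lane_id)
    return sorted(blocked)
```

A lane returned by this check is the kind of discrepancy that could trigger a reroute and a flag back to the mapping teams.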

The data handled by CLAMS and ZRN is mission-critical, and every other system in our autonomy stack relies on it. To a passenger, it’s invisible and effortless. To the Zoox vehicle, it’s a vivid, always-changing picture of the world it’s moving through.