SUPER SENSORS: HOW MOTIONAL’S AVS CAN “SEE” BETTER THAN A HUMAN DRIVER

Our eyes are amazing natural sensors that help our brains understand what's happening around us. They can capture images at a higher resolution than the best smartphone camera, toggling between different depths of field and zooming in and out almost instantly. They also stream those images to our brains in real time. This is how we're able to drive through a busy downtown area, safely navigating around vehicles, pedestrians, and other obstacles that might block our path.

Creating driverless vehicles that operate at least as safely as human drivers requires giving the vehicles the ability to "see" their surroundings clearly and identify potential dangers quickly. Motional robotaxis have an array of high-tech sensors that allow the vehicle to see in ways that exceed what human drivers are capable of. It’s one reason Motional vehicles have been able to log more than 2 million miles of autonomous driving without an at-fault accident.

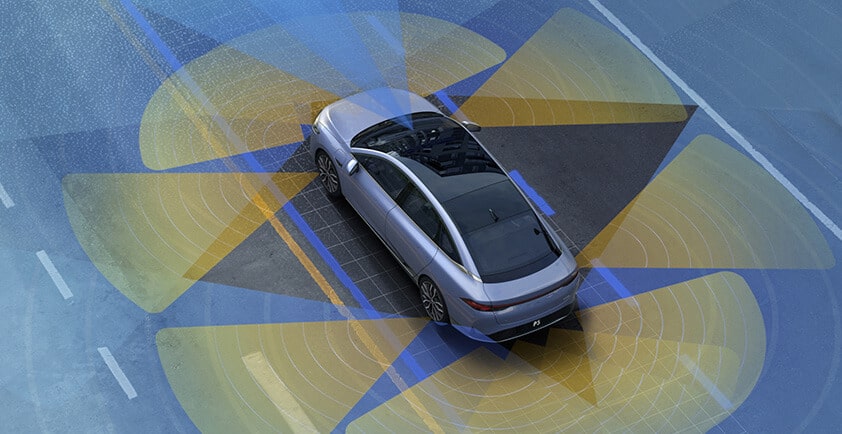

"Our AVs have a 360-degree field of view coverage, meaning we're looking in all directions, at all times, around the vehicle," said Michael Morelli, Fellow at Motional. "If something is happening around our vehicles, we can see it."

The human eye can see in remarkable detail, particularly in what's known as our foveal vision, which allows us to pick up small details with the highest clarity. To put it in perspective, scientists estimate that when we use our fovea, which is packed with photoreceptors, it’s similar to viewing objects with a 15-megapixel camera. However, as objects move outside of our fovea and into our peripheral vision, they become increasingly blurry. Humans make up for this narrow zone of sharp focus by constantly looking around, scanning our entire field of vision, and having our brains create a composite picture of our larger surroundings.

This is why good human drivers don't just stare straight ahead; they quickly scan the horizon left, right, up, down, making sure they can identify anything that could threaten their safe path forward, or the safety of those around them, such as a pedestrian stepping off a curb or a vehicle entering an intersection. But even then, our field of vision isn’t complete, limited by how much we can turn our heads while still capturing what’s happening in front of us.

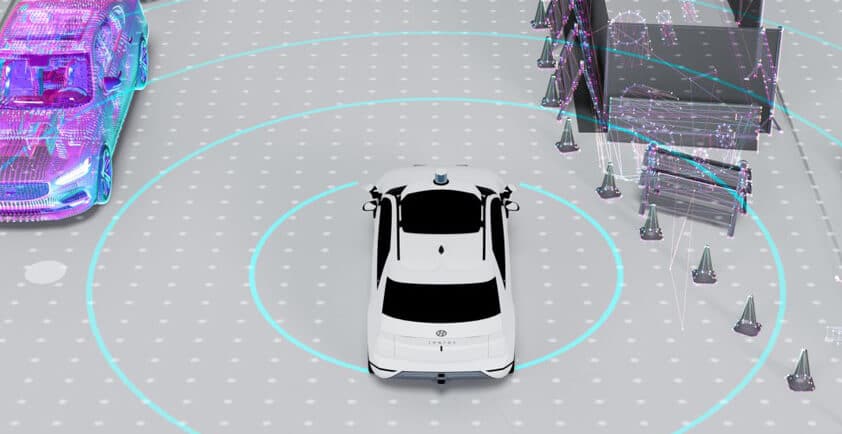

Motional's driverless vehicles feature more than 30 sensors, a mix of cameras, radars, and lidars, positioned to avoid blind spots. The sensors are also layered, with some scanning for threats farther down the road and others looking close to the vehicle. This means the vehicle can see what’s in front, what’s behind, and what’s beside it in full detail at all times.

"We have some wide-field cameras that see lots of objects and more narrow-field cameras looking in more detail at a smaller portion of the field of view, but they all overlap," Morelli said.

This constant flow of sensor data is synchronized and fed into a powerful onboard computer that uses machine learning algorithms to steer and operate the vehicle safely.

All of the sensors combine to help the driverless vehicle understand its surroundings in ways human drivers can't. For example, human eyes, like cameras, are passive sensors that require light from the environment to function. Lidars and radars, however, are active sensors. Lidars detect objects by sending out pulses of infrared light many times every second. Radars send out radio waves. Neither sensor depends on ambient light to function, meaning they are able to help vehicles "see" in the dark.
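To make the idea of an active sensor concrete, here is a minimal sketch of the time-of-flight principle that pulsed lidars and radars commonly rely on: the sensor times how long its own pulse takes to bounce back and converts that delay to a distance. The article does not describe how Motional's sensors compute range, so this is an illustration of the general principle only, and the function names are hypothetical.

```python
# Speed of light in a vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to a reflecting object, given a pulse's round-trip time.

    Hypothetical helper for illustration; not taken from any Motional system.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Round-trip time for a pulse reflected by an object at distance_m."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    delay = round_trip_for_range(200.0)
    print(f"Echo from 200 m returns after {delay * 1e6:.2f} microseconds")
    print(f"Recovered range: {range_from_round_trip(delay):.1f} m")
```

Because the sensor supplies its own pulse, the calculation works the same way at noon or at midnight, which is why ambient light does not matter.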

"At night, humans don't have active vision, we all rely on headlights and trust that they built the road system with gentle enough curbs, because you can only look at the white stripe," Morelli said. "We don't know if something is in the roadway outside of what we can see with headlights. That's the kind of situation where autonomous vehicles are going to be exceedingly good."

In addition, the diverse collection of sensors means the vehicle can see the same object with its cameras, radars, and lidars, giving it a multi-dimensional view.
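One simple way to picture how several sensors' views of the same object can be combined is inverse-variance weighting, a standard textbook technique in which more precise measurements count for more. The article does not say which fusion method Motional uses, so the sketch below is an assumption for illustration only. The `RangeEstimate` type, the `fuse_ranges` function, and the uncertainty values are all hypothetical.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass(frozen=True)
class RangeEstimate:
    sensor: str
    distance_m: float
    std_dev_m: float  # assumed 1-sigma uncertainty; illustrative values only


def fuse_ranges(estimates: list[RangeEstimate]) -> tuple[float, float]:
    """Combine independent range estimates by inverse-variance weighting.

    Returns (fused distance, fused standard deviation), both in meters.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.std_dev_m <= 0 for e in estimates):
        raise ValueError("standard deviations must be positive")
    # Each weight is 1 / variance, so precise sensors dominate the average.
    weights = [1.0 / (e.std_dev_m ** 2) for e in estimates]
    total_weight = sum(weights)
    fused_distance = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total_weight
    # For independent measurements, the fused variance is 1 / (sum of weights).
    fused_std_dev = sqrt(1.0 / total_weight)
    return fused_distance, fused_std_dev


if __name__ == "__main__":
    readings = [
        RangeEstimate("camera", 52.0, 4.0),  # made-up numbers
        RangeEstimate("radar", 50.5, 1.0),
        RangeEstimate("lidar", 50.0, 0.2),
    ]
    distance, std_dev = fuse_ranges(readings)
    print(f"Fused range: {distance:.2f} m (std dev {std_dev:.2f} m)")
```

In this sketch the fused uncertainty is always smaller than that of the best single sensor, which is one way of reading the idea that multiple sensors reinforce the vehicle's understanding of an object.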

"We're able to look at any object with more than one sensor at any time, and then use sensor fusion to reinforce our understanding of what's there," he said.

Lidars are also capable of clearly detecting objects more than 200 meters away, or roughly two soccer fields laid end to end. Even for drivers with 20/20 vision, visual acuity degrades significantly at that distance.

“At 200-300 meters, we can see some things but they have to be pretty bright,” he said.

The next generation of driverless vehicles may have sensors that can detect objects up to 500 meters away, far beyond the capabilities of the human eye.

“The further out we can see, the further away we can detect and classify objects, the more comfortable our ride will be,” he said.
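The link between detection range and ride comfort can be put in rough numbers: the farther away an object is detected, the more time the vehicle has to respond gradually instead of braking hard. The sketch below is simple arithmetic under stated assumptions (a stationary object, a constant speed of 100 km/h chosen for illustration). The function name is hypothetical and nothing here reflects Motional's planning software.

```python
def seconds_until_reached(detection_range_m: float, speed_kmh: float) -> float:
    """Time to reach a stationary object first detected at detection_range_m.

    Assumes the vehicle holds a constant speed; illustrative only.
    """
    if detection_range_m < 0:
        raise ValueError("detection range cannot be negative")
    if speed_kmh <= 0:
        raise ValueError("speed must be positive")
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # convert km/h to m/s
    return detection_range_m / speed_m_per_s


if __name__ == "__main__":
    for range_m in (200.0, 500.0):
        t = seconds_until_reached(range_m, speed_kmh=100.0)
        print(f"{range_m:.0f} m at 100 km/h: {t:.1f} s to respond")
```

At 100 km/h, a 200-meter detection range leaves about 7.2 seconds before the vehicle reaches the object, while 500 meters leaves about 18 seconds.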

Human eyesight does have its advantages, however, such as its dynamic range. This comes into play when we’re driving out of bright sunshine and into a dark tunnel, or vice versa.

“Our eyes are able to adjust very quickly, faster than cameras,” he said.

Replicating what the human eye does naturally, and placing that technology on cars, has taken decades of research and scientific innovation. But that shouldn’t be surprising, said Morelli: after all, it takes years for humans to learn how to use their naturally occurring abilities to become skilled drivers.

“It takes an infant a long time to learn how to use all its sensors, many many years,” Morelli said. “Even teenagers, although they can do a lot of stuff, when in those cognitively demanding environments like driving at night, they need lots of practice.”