A DRIVERLESS FUTURE DEPENDS ON DATA

How to manage the massive data that autonomous vehicles generate

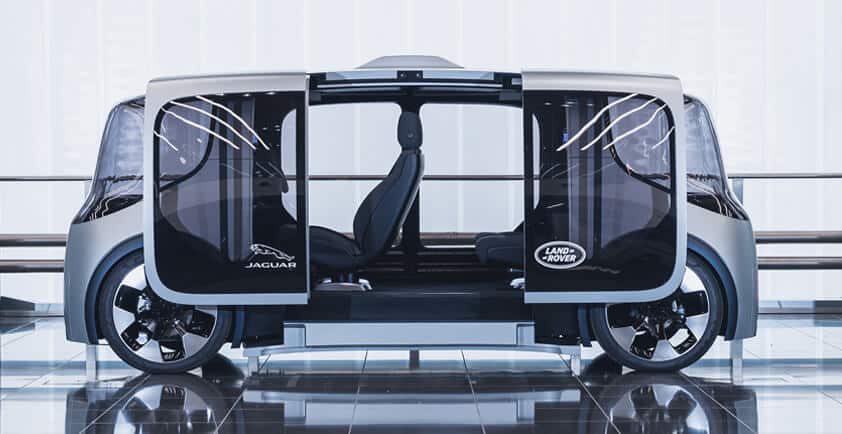

Autonomous cars have captured our imagination for quite some time – think of the Batmobile or Herbie, the iconic Volkswagen Beetle with a mind of its own. While being able to choose a vehicle’s personality as we do its color may still be in the distant future, self-driving cars are edging closer to reality. Volkswagen, Honda, Ford, Mazda and Hyundai are some of the manufacturers that demonstrated autonomous driving and connected service innovations at CES. Many of these companies, as well as other big names such as GM, Toyota and Daimler, have claimed they will have true driverless cars on the road sometime between the end of this year and 2030.

While advances in shiny technologies like artificial intelligence (AI), 5G and the internet of things (IoT) are putting the race toward connected vehicles into high gear, the topic of data management often seems to be missing from the story. And, if Intel’s prediction is right – that an autonomous car will generate about four terabytes (TB) of data in an hour and a half of driving – then the practicality of how to manage all that data needs to be addressed sooner rather than later.

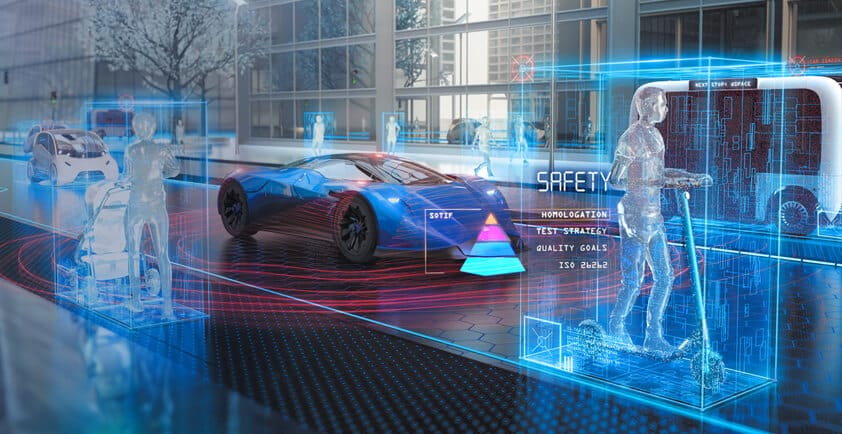

Advanced driver assistance systems are data hogs

Advanced driver assistance systems (ADAS) are an integral part of autonomous vehicle development. Relying on input from multiple data sources including sensors, cameras, computer vision and more, ADAS can either be passive (e.g. lane departure warning) or active (e.g. automatic emergency braking without driver intervention). To learn more about ADAS, register for the ScaleUp 360° Advanced Driver Assistance Systems Europe.

Regardless of which level a company is at with its ADAS research and development, dealing with data is a core challenge that should be on the map today. Current test drives are generating up to 20 TB of data a day and potentially up to 100 TB/day with more advanced sensor sets. Uploading this amount of data using conventional tools can take anywhere from a week to months for a single car. In addition, building and improving core AI models to analyze all that data requires high-performance computing power at a location with high-speed, secure access to the preferred cloud provider, private data centers, data sources and data brokers.
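To put those volumes in perspective, here is a minimal back-of-the-envelope sketch of how long a day of test-drive data would take to upload at full line rate. The function name `ideal_upload_hours` and the `efficiency` parameter are illustrative assumptions, as are the decimal units (1 TB = 10^12 bytes) and the 1 and 10 Gbps link speeds. Real uploads add protocol overhead, congestion and validation time, so these are lower bounds and not measured results.

```python
def ideal_upload_hours(volume_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to move `volume_tb` terabytes over a `link_gbps` link.

    Assumes decimal units (1 TB = 1e12 bytes, 1 Gbps = 1e9 bits/s).
    `efficiency` is a hypothetical knob for the share of the nominal
    bandwidth that is actually usable (1.0 = perfect line rate).
    """
    if volume_tb < 0:
        raise ValueError("volume_tb must be non-negative")
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")

    bits = volume_tb * 1e12 * 8                        # terabytes -> bits
    seconds = bits / (link_gbps * 1e9 * efficiency)    # bits / (bits per second)
    return seconds / 3600


if __name__ == "__main__":
    # Daily volumes quoted in the article, over two illustrative link speeds.
    for volume_tb in (20, 100):
        for link_gbps in (1, 10):
            hours = ideal_upload_hours(volume_tb, link_gbps)
            print(f"{volume_tb:>3} TB over {link_gbps:>2} Gbps: {hours:6.1f} h")
```

Under these assumptions, even a perfectly utilized 1 Gbps link needs more than 24 hours to upload 20 TB, so a car producing that much data every day would fall further behind with each drive.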

To address these challenges, there are two key considerations businesses need to keep in mind for their IT infrastructures:

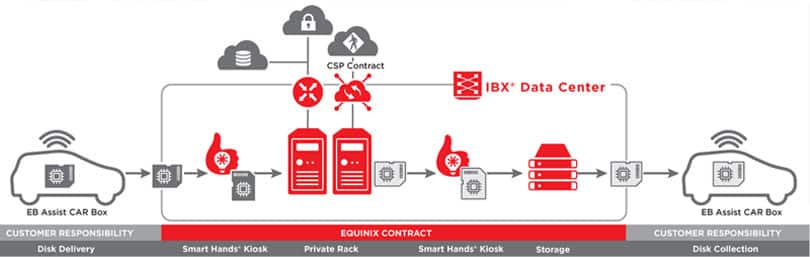

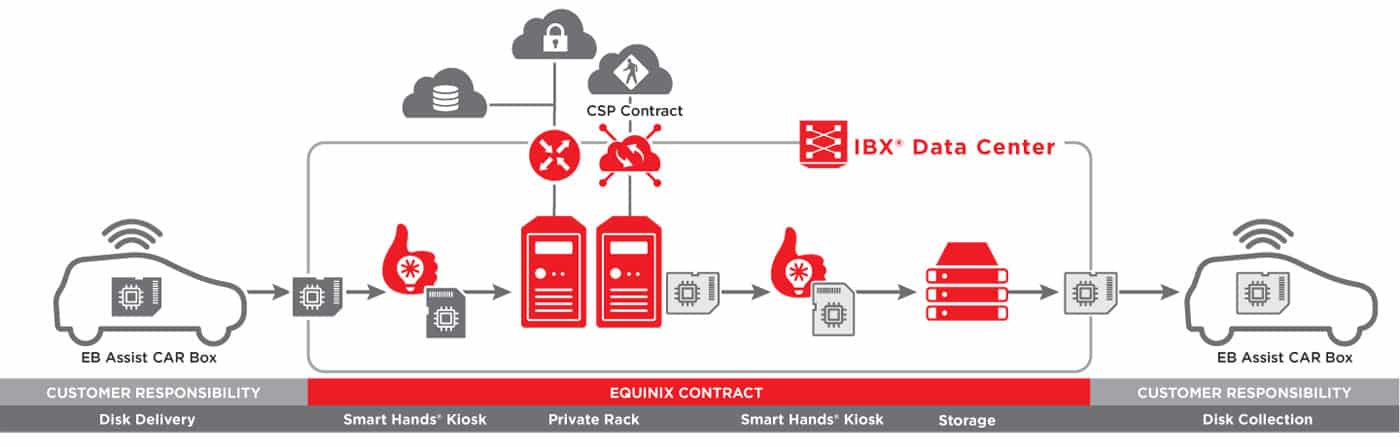

1. Massive data ingestion needs high-bandwidth, low-latency connectivity: The biggest challenge for Elektrobit (EB), a global supplier of connected software for the automotive industry, was how to securely store, transfer and process the massive amounts of data generated daily by test cars. A "digital data garage" proof of concept (POC) was implemented in an Equinix Solution Validation Center® (SVC®) at the Frankfurt (FR4) Equinix International Business Exchange™ (IBX®) facility to test uploading data from 30 cars collecting ~19 TB a day to Microsoft Azure. The test used various connection speeds and methods – public internet (1 Gbps), direct connect (1-10 Gbps) and Equinix Cloud Exchange Fabric™ (ECX Fabric™, 10 Gbps).

Excluding data validation, uploading to the cloud via ECX Fabric™ cut the upload time from 85 days over the public internet to just 12 hours. In addition to faster upload and processing times, it sped up the development process by allowing exabytes of data to be better managed by collaborative teams that now find their workflows simplified. With ECX Fabric™ accessing the cloud at the edge, terabytes or more of data can be piped in from EB overnight, enabling test drive data to be processed and used more quickly by Elektrobit’s customers.
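As a quick sanity check on the figures above, the following sketch works out the speedup implied by the two reported upload times and sets it against the nominal bandwidth ratio of the two links. The variable names are illustrative. Only the 85 days, 12 hours, 1 Gbps and 10 Gbps values come from the text.

```python
from datetime import timedelta

# Upload times reported for the proof of concept (excluding data validation).
internet_upload = timedelta(days=85)   # public internet, 1 Gbps
fabric_upload = timedelta(hours=12)    # ECX Fabric, 10 Gbps

# Dividing one timedelta by another yields a plain float ratio.
reported_speedup = internet_upload / fabric_upload

# Ratio of the nominal link speeds quoted for the two connection methods.
nominal_bandwidth_ratio = 10 / 1

print(f"Reported speedup:        {reported_speedup:.0f}x")
print(f"Nominal bandwidth ratio: {nominal_bandwidth_ratio:.0f}x")
```

The reported improvement is far larger than the difference in nominal link speed alone. The article does not break down where the remainder comes from, so the gap should be read as an observation and not as an explanation.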

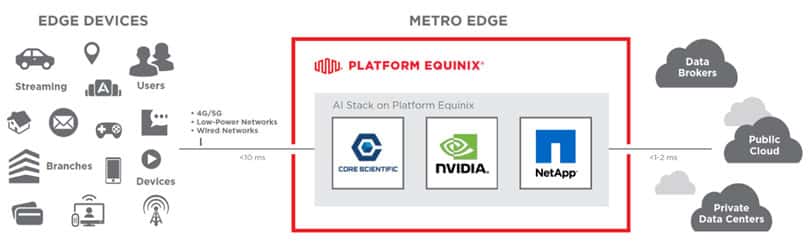

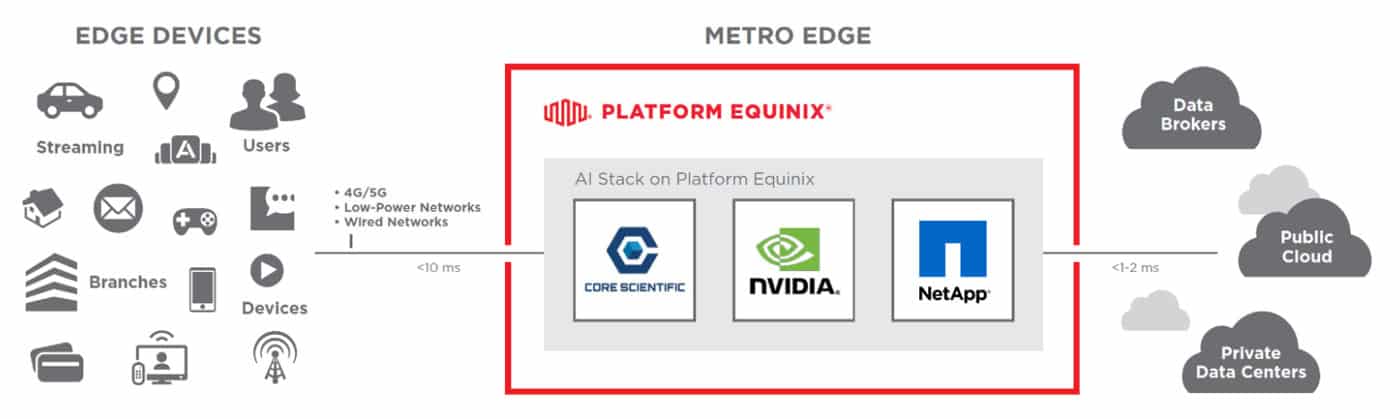

2. Successful AI model building requires big data + high-performance compute: AI models are only as good as the data that’s used to build them. To prototype, test and refine AI models more rapidly, data scientists need access to vast sets of data coming from multiple sources including sensors, cameras and other edge sources, making proximity of datasets to compute resources essential. AI infrastructure on Platform Equinix® provides the best of both worlds – access to high-performance compute resources with high-speed, secure interconnection to multiple clouds, private data centers and data brokers. With the AI as a Service platform offered in a test drive by Equinix, NVIDIA, NetApp and Core Scientific, businesses can accelerate their ADAS development with:

> Easy consumption of an AI as a Service for data scientists.

> High-performance compute, network and storage technologies optimized for running all the major AI software frameworks.

> High-speed, secure interconnection to IT systems and data sources across public clouds, private data centers and edge locations.

Emerging technologies like IoT, 5G and AI are enabling a myriad of new use cases including autonomous cars, smart cities, augmented reality and more. But as the race to build and test prototypes accelerates, long-term implications for the supporting IT architectures may be overlooked. Five years from now, a self-driving car may very well collect 10-100x as much data as it collects today.

Getting past that hurdle will require a distributed, scalable IT infrastructure capable of supporting rapid data collection, ingestion and analysis. Global private connectivity to dense ecosystems of providers, enterprises and partners also enables fast, secure exchange of data, insights and AI models to accelerate ADAS innovation across the industry.

Download the IDC Infobite to learn more about the opportunities and challenges ahead for the automotive industry.

Author - Petrina Steele, VP Business Development & Innovation EMEA