THE NEXT STEPS FOR OUR ROBOTAXI IN LAS VEGAS, FOSTER CITY, AND BEYOND

>> Zoox takes on a larger geofence, higher speeds, light rain, and nighttime driving.

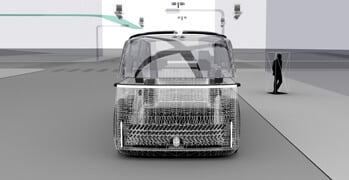

When we journeyed onto public roads in Foster City, CA, and Las Vegas, NV last year, it was a big step for us and the autonomous vehicle (AV) industry. It marked the first time in history that a purpose-built AV—with no manual controls—drove autonomously on open public roads with passengers. Since then, we’ve been hard at work in preparation for our commercial launch.

Leveling up

First off, we’ve expanded our Las Vegas geofence: the virtual boundary within which we operate our purpose-built vehicles. This geofence spans approximately five miles from our Las Vegas headquarters to the south end of the Strip along multiple routes. It’s larger and more complicated, with three-lane roads, required lane changes, unprotected right turns onto high-speed roadways, and double right- and left-hand turn lanes. Driving in this larger area exposes our robotaxis to the busiest conditions they’ve ever encountered and provides invaluable data and lessons as we continue to scale.
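For readers who want a concrete picture of a geofence, here is a minimal sketch of a containment check using the standard ray-casting test. It is an illustration only, not our production code: the function name `inside_geofence` and the rectangular `SERVICE_AREA` (a made-up box 1 mile wide and 5 miles long) are hypothetical, and a real boundary would be a far more detailed polygon.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in any planar coordinate system


def inside_geofence(point: Point, boundary: List[Point]) -> bool:
    """Ray-casting test: True if `point` lies inside the polygon `boundary`."""
    x, y = point
    inside = False
    n = len(boundary)
    for i in range(n):
        x1, y1 = boundary[i]
        x2, y2 = boundary[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point count.
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside  # each crossing to the right flips the state
    return inside


# Hypothetical service area in miles (NOT the real Las Vegas boundary).
SERVICE_AREA = [(0.0, 0.0), (1.0, 0.0), (1.0, 5.0), (0.0, 5.0)]

print(inside_geofence((0.5, 2.5), SERVICE_AREA))  # a point within the box
print(inside_geofence((1.5, 2.5), SERVICE_AREA))  # a point east of the box
```

A point counts as inside when a ray cast from it to the right crosses the boundary an odd number of times, which is why each crossing flips the `inside` flag.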

Secondly, we’ve expanded the autonomous driving capabilities of our robotaxi in Las Vegas and Foster City to include driving at speeds of up to 45 mph, in light rain, and at night. Let’s dive into each one by one.

Gaining speed

While our robotaxi can reach 75 mph, we started out driving routes with limits of 35 mph last year and are now driving routes with a higher limit of 45 mph. Driving autonomously at a higher speed increases difficulty in all conditions. We’ve set rigorous internal safety targets, and we did not begin driving these routes until we were able to quantify that we had met and consistently exceeded those targets.

Our perception team had to make long-range detections reliable enough for unprotected turns onto high-speed roads. And to navigate the new geofence, we had to tackle lane changes, which require the AI stack to plan the trajectory in space and time simultaneously.
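To see why long-range detection matters more at higher speeds, consider a rough back-of-the-envelope sketch. It estimates how far an oncoming vehicle travels while a turn is being completed. The 6-second maneuver time is purely an assumed figure for illustration, not a Zoox parameter, and the function name is hypothetical.

```python
MPH_TO_MPS = 0.44704  # exact conversion: 1 mph = 0.44704 m/s


def required_detection_range_m(cross_traffic_mph: float, maneuver_s: float) -> float:
    """Distance (meters) an oncoming vehicle covers during the maneuver."""
    return cross_traffic_mph * MPH_TO_MPS * maneuver_s


ASSUMED_MANEUVER_S = 6.0  # hypothetical time to complete an unprotected turn

for limit_mph in (35, 45):
    distance = required_detection_range_m(limit_mph, ASSUMED_MANEUVER_S)
    print(f"{limit_mph} mph -> {distance:.1f} m")
# prints:
# 35 mph -> 93.9 m
# 45 mph -> 120.7 m
```

Under that assumption, moving from 35 mph to 45 mph cross traffic adds roughly 27 meters to the distance at which an approaching vehicle must already be detected.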

Because the vehicle’s Prediction and Planner systems work together, the vehicle can adjust its behavior moment by moment as conditions around it change.

"A big advancement in our AI stack is that Planner and Prediction work hand in hand."

MARC WIMMERSHOFF, VP OF AUTONOMY SOFTWARE

Come rain or shine

Rain can be tricky for AVs because it can create distractions for their sensors. Our lidar system, for example, may pick up reflections from droplets and puddles, so we had to make sure our vehicle’s Perception system was robust to such lidar distractors.
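One generic way to picture the problem is radius-based outlier removal, a common point-cloud cleanup technique: a stray return from a droplet tends to sit alone, while returns from a solid object cluster together. The sketch below is an assumption-laden illustration, not a description of how our Perception system works. The function name, the 0.3 m radius, and the two-neighbor threshold are all hypothetical.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]  # (x, y, z) in meters


def drop_isolated_returns(
    points: List[Point3D], radius_m: float = 0.3, min_neighbors: int = 2
) -> List[Point3D]:
    """Keep points that have at least `min_neighbors` other points within `radius_m`."""
    r2 = radius_m ** 2
    kept = []
    for i, (x, y, z) in enumerate(points):
        neighbors = 0
        for j, (u, v, w) in enumerate(points):
            # Compare squared distances to avoid a square root per pair.
            if i != j and (x - u) ** 2 + (y - v) ** 2 + (z - w) ** 2 <= r2:
                neighbors += 1
        if neighbors >= min_neighbors:
            kept.append((x, y, z))
    return kept


# Made-up data: three returns from one object plus a single stray return.
object_returns = [(10.0, 0.0, 0.5), (10.1, 0.0, 0.5), (10.0, 0.1, 0.5)]
stray_return = (4.0, 1.0, 1.2)
cleaned = drop_isolated_returns(object_returns + [stray_return])
print(len(cleaned))  # the stray return is dropped, the cluster is kept
```

This brute-force version compares every pair of points, which is fine for a toy example. A real pipeline would need a spatial index and, more importantly, far richer cues than point density alone.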

Our AI also applies what it has learned from our test fleet that’s driving autonomously in rainy Seattle, cross-correlating it with our rain data from Las Vegas and Foster City. By having data from multiple geofences, we’re set up to grow our capabilities even more.

Nightrider

Just like human eyes, our vehicle’s Perception system needs to adjust between daylight and nighttime. While radar and lidar function effectively in low-light conditions, cameras have a harder time.

For nighttime driving, we needed to be able to detect unclear shapes in darkness. This requires training and refining our machine-learning models, based on data from both our test fleet and our robotaxis.

"There’s a ‘flywheel effect’ of collecting data, feeding it back into your models, and getting a better model with better precision and better recall."

MARC WIMMERSHOFF, VP OF AUTONOMY SOFTWARE
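For readers unfamiliar with the two metrics in that quote: precision is the share of detections that were correct, and recall is the share of real objects that were detected. A minimal sketch follows, using made-up counts rather than any actual Zoox results. The function name is hypothetical.

```python
from typing import Tuple


def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> Tuple[float, float]:
    """Return (precision, recall) from raw detection counts."""
    detected = true_pos + false_pos  # everything the model reported
    actual = true_pos + false_neg    # everything that was really there
    precision = true_pos / detected if detected else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


# Hypothetical counts before and after a round of retraining.
print(precision_recall(80, 20, 20))  # (0.8, 0.8)
print(precision_recall(90, 10, 30))  # (0.9, 0.75)
```

The second example shows why both numbers are tracked: a model can report fewer false alarms (higher precision) while missing more real objects (lower recall).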

Viva Las Vegas

Deploying our robotaxi in a larger geofence is a big milestone for Zoox. Operating autonomously at higher speeds, with required lane changes, in light rain, and at night is a key achievement too, because these are complex scenarios we must handle to operate our service.

As always, safety is foundational to Zoox, so we’ll continue to be measured and thoughtful in our approach to commercialization. These critical updates bring us closer to safely and confidently offering Zoox to the public. We can’t wait for you to experience your first ride later this year!