SELF-DRIVING CARS NEED TO GIVE PASSENGERS A BETTER EXPERIENCE. HERE’S HOW.

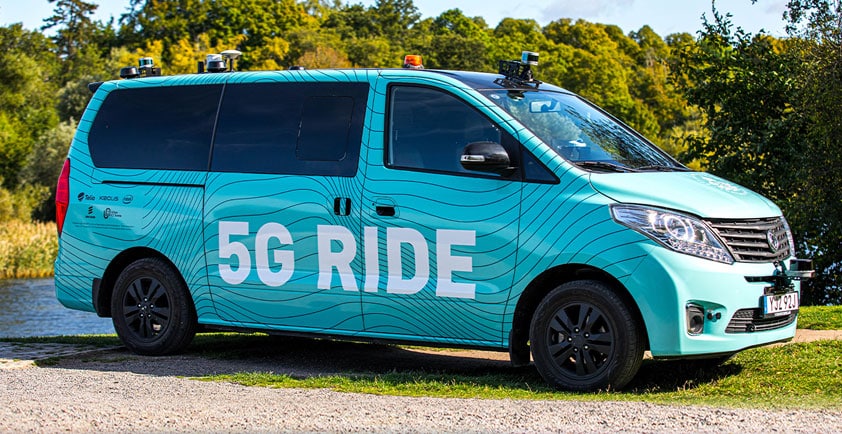

The adoption of new technology hinges on user experience. Self-driving cars are no different. Below, Ericsson tech hero Alvin Jude explores how edge services will be critical to improving the self-driving car passenger experience, for example by collecting nearby data and visualizing it in 3D. Read on to learn more.

“He doesn’t even trust my driving, do you think he’ll trust a machine?” My friend was half-joking, but his father’s long ‘nooooooooo’ when we mentioned self-driving cars gave us a clue. I realized one crucial barrier to the uptake of connected vehicles: most people are anxious about getting into one to begin with.

My friend’s dad isn’t alone in his sentiment. In a 2018 survey, 52 percent of Americans claimed they would never want to use a driverless car, and only 9 percent said they would use one as soon as possible. Older users are also far less willing to use one. This does not seem right. As I grow old, I want to benefit from autonomous vehicles, especially if I lose my mobility or ability to drive. I want self-driving taxis to take me to parks, connect me with my family, or take me to medical appointments.

So, why the hesitation? Why the long, emphatic ‘nooooooo’s?

I recall my mum teaching me how to drive. I remember her sitting in the front seat and slamming on an invisible brake pedal. I recall my passengers grabbing onto roof handles whenever they sensed the slightest danger. I can’t really complain. I always feel a bit of anxiety when I’m in a taxi, or when I’m driven around by somebody whose driving style doesn’t match mine. Most of all, I remember the many people who would tell me to ‘watch that bike,’ ‘pay attention to that pedestrian,’ and ‘look out for that red van.’ In most cases, I would have already noticed the hazard, but I still needed to say “yup, I see her” as calmly as possible and with minimal annoyance.

Now, how could we do that with a computerised driver?

Improving the self-driving car experience

Our philosophy for creating good software is to build apps that people want to use, not ones that people have to use. This philosophy can also be applied to self-driving cars. How do we create self-driving cars that people want to get into, regardless of whether or not they need to? That’s easy. We simply need to design them with the passenger experience in mind.

In his book ‘The Design of Everyday Things’, Don Norman laid down a number of core UX design principles. One is the ability to provide feedback. Let’s think about this in the context of self-driving cars: the car needs to give the passenger feedback on what actions it will take. It should also explain why.

The motivation here is simple. The developer in me who understands what goes on under the hood knows that the onboard computer has seen all the hazards, is calculating the best course of action in real-time, has my safety and comfort optimised, and is constantly working to ensure the safety of everyone around me. The passenger in me still wants to tell it to “look out for that red van.”

Constant feedback is the key to ensuring a good passenger experience, specifically one that alleviates anxiety. It is the car telling the passengers ‘yes, I see that red van.’ So, how do we do that? Voice is an option of course, but that would quickly become annoying. Visualization, on the other hand, would work superbly.

Since the vehicle is already collecting a tremendous amount of sensor information, it should be possible to reconstruct a view of the world from this data. The key difference is that the sensors use machine vision and the data is intended for machine consumption, not human consumption. Through a series of design exercises, we identified the gaps between views intended for machines and those intended for humans, backed by theory on human factors, our knowledge of sensors, and observation of current trends in intelligent vehicles.

Visualization of vehicle intent

Our hypothesis was that continuous, instant, on-demand communication between the vehicle and the passenger would alleviate anxiety in passengers, improving the passenger experience. We intended to provide this communication through visualisation only, allowing the passengers to glance at the screen any time they wanted. Voice and/or audio could be used but only when absolutely necessary, thereby reducing sensory overload which would only heighten anxiety.

The first thing to note is that people tend to reconstruct objects in their heads. Take this cup for example:

Figure 1: Can you imagine what this cup looks like from the back?

You’ve probably never seen this exact cup before, and you can only see it from one angle. But you instantly know what it looks like from the back. Most people are also able to mentally rotate objects despite only seeing them from one angle or in photographs. Our ability to fill in the blanks is why we see two large triangles here.

Figure 2: How many triangles do you see in this picture?

Did you notice that there are in fact no triangles at all? We fill in the blanks and draw connections, which allows us to imagine the back of the cup as well as see triangles when they don’t exist (if you’re interested in this, look up Gestalt Psychology). Machines don’t typically do that – because they don’t have to – but they can be trained to reconstruct images the same way people would.

Egocentric vs. allocentric

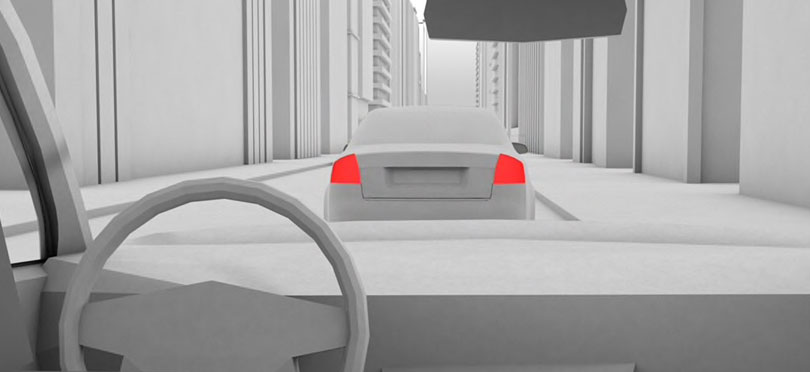

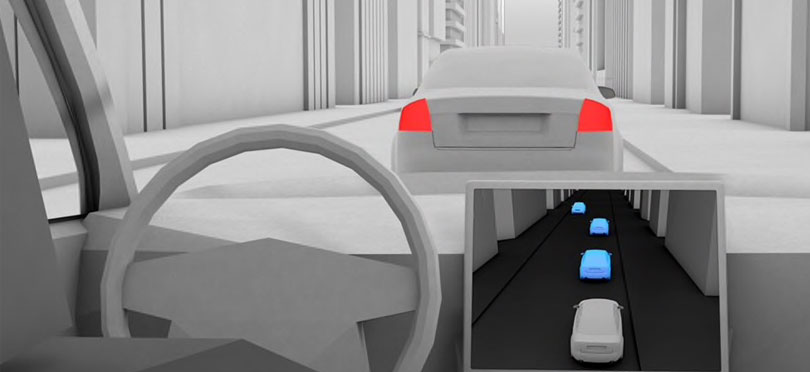

That brings us to the next part of our design: visualization. Simply put, there are two ways to view the world: egocentric or allocentric.

Egocentric is basically a ‘first person’ view, while allocentric is a ‘third person’ view. Allocentric views seem to be better in this case for a few reasons: it provides a wider view of the world, it allows a better understanding of the world, and it can show items occluded from the passenger, but not from the car (due to the position of sensors on the vehicle).
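To make the distinction concrete, here is a minimal sketch of how a point measured relative to the car (egocentric) could be placed on a shared top-down map (allocentric). It is an illustration only, not the method used in our prototype: the `VehiclePose` class, the `ego_to_map` function, and the 2D flat-ground simplification are all assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VehiclePose:
    """Hypothetical pose of the car in a shared, top-down map frame."""
    x: float        # metres along the map's x-axis
    y: float        # metres along the map's y-axis
    heading: float  # radians, counter-clockwise from the map's x-axis


def ego_to_map(pose, forward, left):
    """Convert a point measured relative to the car into map coordinates.

    `forward` and `left` are metres ahead of and to the left of the car.
    This is a plain 2D rotation by the heading followed by a translation.
    """
    cos_h, sin_h = math.cos(pose.heading), math.sin(pose.heading)
    map_x = pose.x + forward * cos_h - left * sin_h
    map_y = pose.y + forward * sin_h + left * cos_h
    return map_x, map_y


# Assumed scenario: the car sits at (10, 5) and faces along the map's y-axis.
# A sensor reports an oncoming vehicle 20 m straight ahead.
pose = VehiclePose(x=10.0, y=5.0, heading=math.pi / 2)
oncoming = ego_to_map(pose, forward=20.0, left=0.0)
```

Once every detected object is expressed in the same map frame, the scene can be drawn from any vantage point, including one that shows objects the passenger cannot see from their seat.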

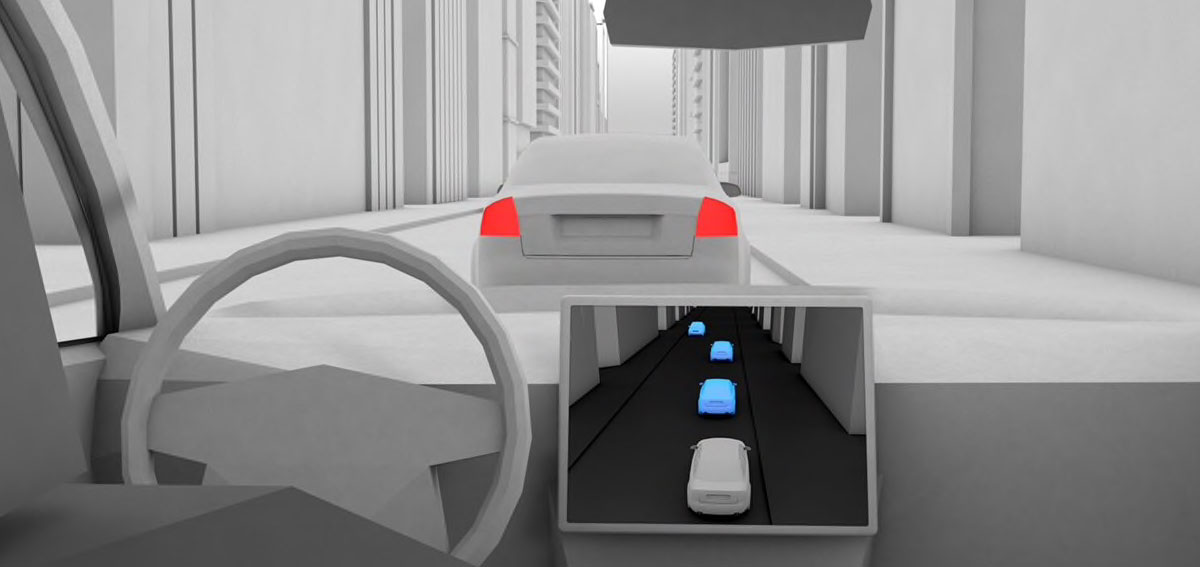

Figure 3: Egocentric view - here’s what a passenger would see from the back seat.

Figure 4: Allocentric view of the same scene. Notice the oncoming vehicle which was previously occluded.

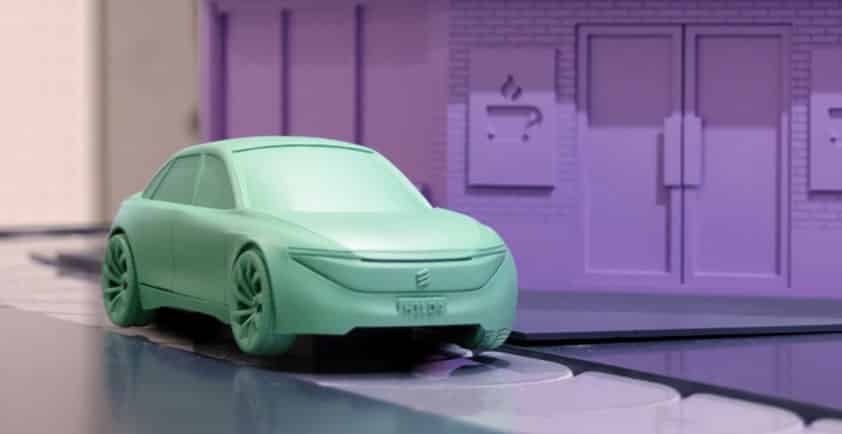

Now all we need to do is take this allocentric reconstructed view of the world, and place it within view of the user.

Figure 5: Now the passenger has an allocentric, reconstructed visualization of the world.

This is just the first step. There are many more questions that we need to ask. For example, do we need to provide photorealistic visualizations? Or would it suffice to show approximations, such as an icon to represent other cars? Do passengers need to know that the car ahead is a green 1995 Toyota Corolla? Or is it sufficient that they see it’s a green sedan? Perhaps if everything were monochromatic, it would be easier to draw their attention to more pertinent information, such as the car’s planned path and the hazards it sees. There is a balance to be established, and there are many more research questions and design choices that we look forward to investigating.

How we can overcome occlusion

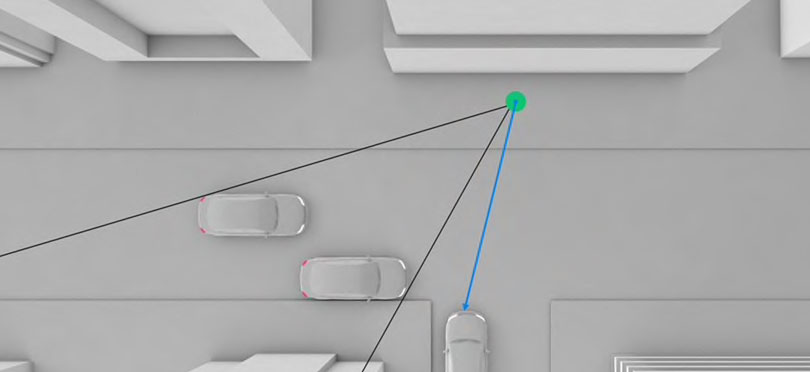

An important question we ask ourselves at Ericsson is how can we further improve this experience? For this, we need to fully understand the pain I face every morning as I drive out of my house. Every morning there will be a car parked close to my exit, blocking my onboard sensors from the moving vehicles behind it.

Figure 6: All the onboard sensors will not be able to capture that moving vehicle.

Every morning, I inch slowly forward until I’m certain I won’t be t-boned. Sometimes oncoming vehicles will see my bumper and provide me with the first (and usually only) annoyed honk of the day. This problem could be solved by putting mirrors on the road, but that won’t really help improve the passenger experience of self-driving cars.

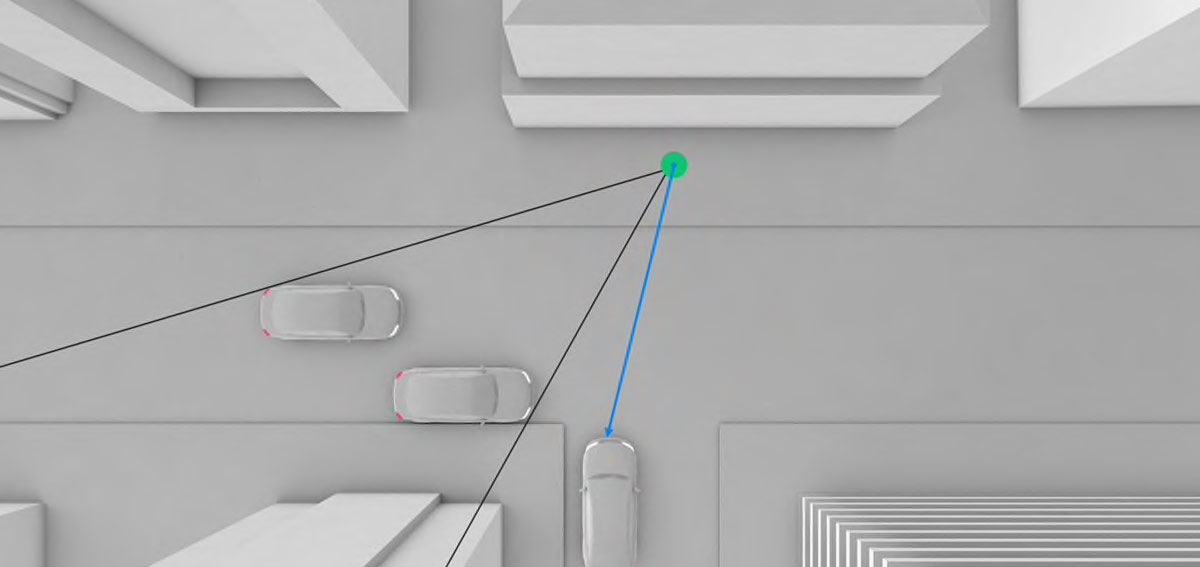

Figure 7: Road sensors could signal oncoming vehicles.

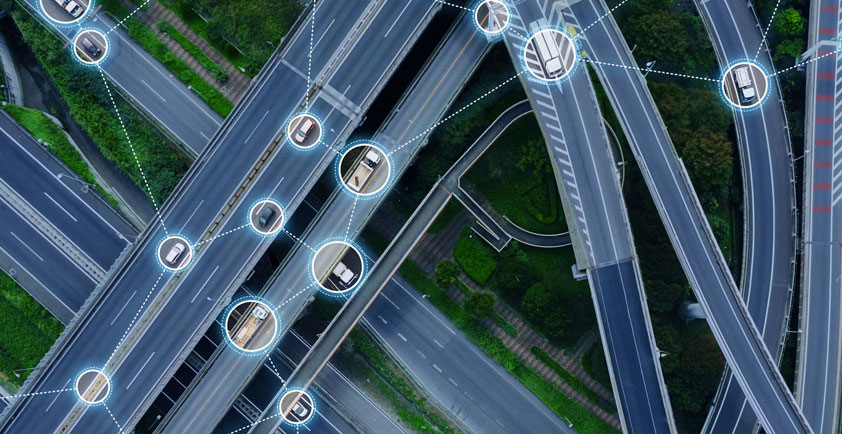

If we can tap into road sensors, then we could fuse all this information together and show the passengers in self-driving cars the full picture. Vehicles and hazards that are occluded from the vehicle could now be…un-occluded. This is my vision of the world: one that is completely free of occlusion.

The journey ahead

There is, of course, a lot more work to be done here. Reconstructing the world in real-time is no simple task. Low-latency, high-bandwidth connectivity between environment sensors and vehicles is essential. A key ingredient in improving the passenger experience of autonomous vehicles is an edge cloud that collects data from multiple heterogeneous sensors, consolidates it into one coherent view of the world, and then sends the relevant parts to those who want it.
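As a rough illustration of that collect, consolidate, and distribute loop, the sketch below merges detections from on-board and roadside sensors and then selects the objects near one vehicle. Everything in it is an assumption made for the example: the `Detection` and `FusedObject` types, the `fuse` and `relevant_to` functions, the 2 m merge radius, and the premise that all sensors already report positions in one shared map frame. A real system would also have to handle timing, sensor uncertainty, and object tracking, none of which is modelled here.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Detection:
    """One object reported by one sensor, in a shared map frame (assumed)."""
    source: str
    label: str
    x: float
    y: float


@dataclass
class FusedObject:
    """One object in the consolidated view, averaged over its reports."""
    label: str
    x: float
    y: float
    sources: set = field(default_factory=set)
    count: int = 0


def fuse(detections, merge_radius=2.0):
    """Greedily merge same-label detections that lie within merge_radius metres."""
    fused = []
    for det in detections:
        match = next(
            (obj for obj in fused
             if obj.label == det.label
             and math.hypot(obj.x - det.x, obj.y - det.y) <= merge_radius),
            None,
        )
        if match is None:
            fused.append(FusedObject(det.label, det.x, det.y, {det.source}, 1))
        else:
            # Update the running mean position with the new report.
            match.x = (match.x * match.count + det.x) / (match.count + 1)
            match.y = (match.y * match.count + det.y) / (match.count + 1)
            match.count += 1
            match.sources.add(det.source)
    return fused


def relevant_to(fused, x, y, radius):
    """Return only the fused objects within radius metres of a vehicle."""
    return [obj for obj in fused if math.hypot(obj.x - x, obj.y - y) <= radius]


# Assumed scenario: the car and a roadside sensor both see one car ahead,
# while only the roadside sensor sees a second car hidden behind a parked one.
detections = [
    Detection("onboard", "car", 30.0, 0.0),
    Detection("roadside", "car", 30.5, 0.4),
    Detection("roadside", "car", 12.0, -8.0),
]
world = fuse(detections)
nearby = relevant_to(world, x=0.0, y=0.0, radius=20.0)
```

In this scenario `nearby` contains only the hidden car, which the vehicle at the origin could not have detected with its own sensors.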

I have no doubt that an unocclusionator will be available by the time I am too old to drive. I’m working hard to make sure my friend’s dad will also benefit from it.

Author: Alvin Jude - Senior Researcher, Human-Computer Interaction - Ericsson Research