VISTEON - WHITE PAPER: CAMERA-BASED DRIVER MONITORING SYSTEM USING DEEP LEARNING

Driver monitoring is emerging as an essential requirement for ADAS and autonomous driving. Camera-based driver monitoring systems (DMS) help detect driver drowsiness and distraction, and so play a crucial role in ensuring driver safety. DMS solutions have traditionally been developed using computer vision and image processing approaches. While these approaches have contributed key advancements to the field, they require parameters to be fine-tuned for conditions such as lighting, driver positioning and gender.

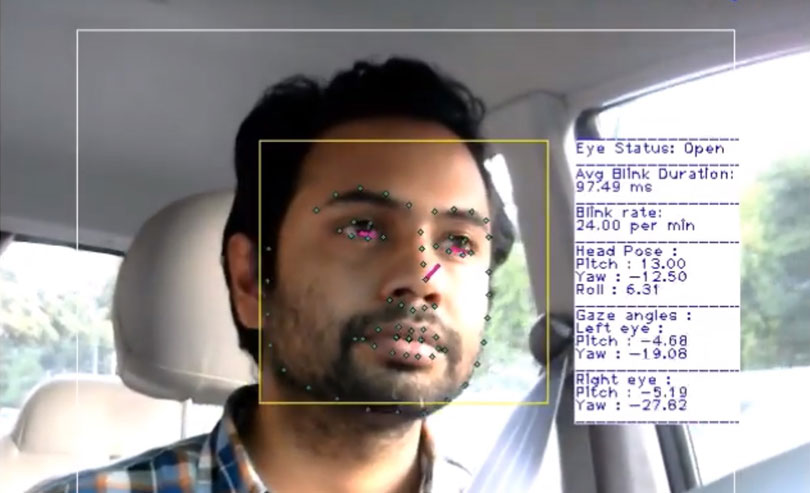

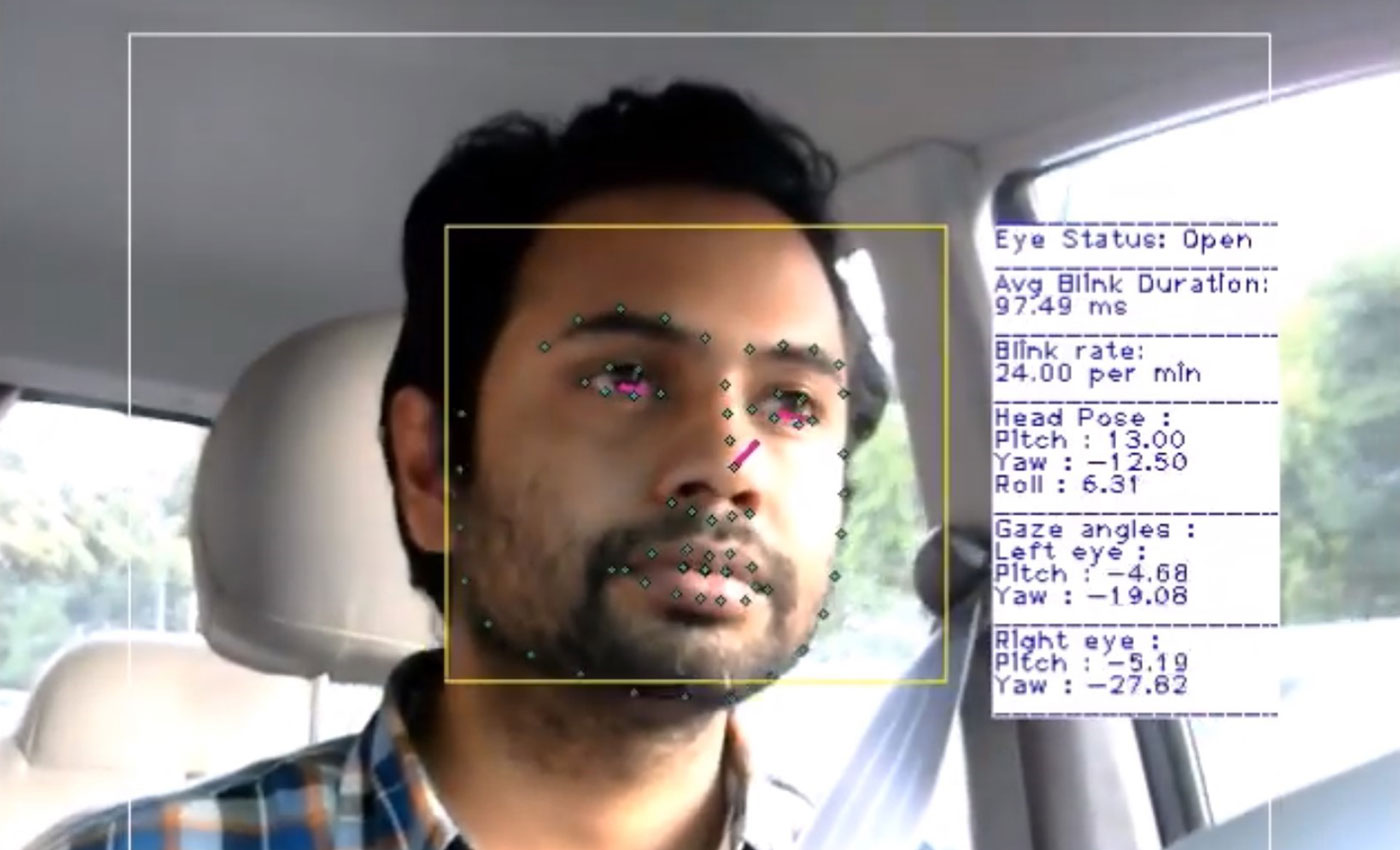

In a new white paper, a technical team from Visteon subsidiary AllGo Systems proposes a real-time, infrared camera-based DMS with features including head and eye tracking, as well as eye state analysis. Eye state analysis monitors blink rate, blink duration and whether the eyes are open or closed; these measurements can be used to implement driver safety protocols.
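The article does not say how blink rate and blink duration are computed. As a minimal sketch, assuming a per-frame eye-state classifier that labels each frame as open or closed and a known camera frame rate, both metrics can be derived by finding runs of closed frames. The function name `blink_stats` and the rule of discarding closed runs that touch the edges of the analysis window are illustrative assumptions, not AllGo's method.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BlinkStats:
    blink_count: int
    blink_rate_per_min: float
    blink_durations_s: List[float]


def blink_stats(eye_closed: List[bool], fps: float) -> BlinkStats:
    """Derive blink metrics from a per-frame eye-state sequence.

    Assumption (hypothetical): each entry is one frame's output from an
    eye-state classifier, True meaning "eyes closed". A blink is a maximal
    run of closed frames that starts and ends inside the window; runs that
    touch either edge are ignored because their true duration is unknown.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    durations: List[float] = []
    run_start = None
    for i, closed in enumerate(eye_closed):
        if closed and run_start is None:
            run_start = i                      # a closed run begins
        elif not closed and run_start is not None:
            if run_start > 0:                  # skip a run already in progress at frame 0
                durations.append((i - run_start) / fps)
            run_start = None                   # a run still open at the end is dropped
    window_s = len(eye_closed) / fps
    rate = len(durations) * 60.0 / window_s if window_s > 0 else 0.0
    return BlinkStats(len(durations), rate, durations)
```

For example, at 10 frames per second, a 6-second window containing one 3-frame and one 5-frame closure yields two blinks of 0.3 s and 0.5 s, or 20 blinks per minute. Note that this sketch counts any complete closure as a blink; it does not separately flag unusually long closures.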

The system has been developed using deep learning, which makes the solution more robust across a wider range of individuals, genders and other factors that previously constrained the process. It has also been optimized to run on a range of embedded platforms without the need for graphics processing units (GPUs) or cloud support at runtime, which helps lower power consumption and cost.

Treating face detection as an object detection and classification task, AllGo trained a deep neural network on face-annotated images, building a face detector that is robust to lighting changes, occlusions and varying expressions. For head pose estimation, the team developed a CNN-based head tracking system that uses a single camera: it takes the detected face region of the image as input and outputs the 3D angles of the head.
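The white paper summary does not describe how the face detector's raw outputs are post-processed. In detection-style pipelines, a network usually emits many overlapping candidate boxes with confidence scores, and greedy non-maximum suppression (NMS) is a common way to reduce them to one box per face before the face region is cropped and passed on, for instance to a head pose network. The sketch below assumes that setup; the `(x1, y1, x2, y2)` box format and both thresholds are illustrative assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels, x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        iou_thresh: float = 0.5, score_thresh: float = 0.3) -> List[int]:
    """Greedy NMS: returns indices of kept face boxes, highest score first.

    Thresholds are hypothetical defaults, not values from the paper.
    """
    # Drop low-confidence candidates, then visit the rest best-first.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thresh),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not heavily overlap an already-kept box.
        if all(iou(boxes[i], boxes[k]) < iou_thresh for k in keep):
            keep.append(i)
    return keep
```

With two heavily overlapping candidates on the same face and one separate box elsewhere, only the higher-scoring box of the overlapping pair survives, alongside the separate box.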

The gaze estimator is a deep learning-based, person-dependent, non-intrusive network trained to work on each of the driver's eyes independently. It also takes the head pose angles as input to compute a gaze vector for each eye; the two gaze vectors can then be combined using simple geometry to find the point where they intersect.
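The "simple geometry" is not spelled out in the article. Because two estimated gaze rays in 3D almost never meet exactly, one common approach (assumed here) is to take the midpoint of the shortest segment between the two lines. The sketch assumes eye-centre positions and roughly unit-length gaze directions expressed in a shared camera coordinate frame; the function name `gaze_point` and the `eps` threshold are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def gaze_point(p_left: Vec3, d_left: Vec3, p_right: Vec3, d_right: Vec3,
               eps: float = 1e-9) -> Optional[Vec3]:
    """Estimate a 3D fixation point from two gaze rays p + t * d (t >= 0).

    Returns the midpoint of the shortest segment between the two lines, or
    None if the rays are (nearly) parallel or the closest points lie behind
    the eyes (diverging gaze).
    """
    w0 = tuple(a - b for a, b in zip(p_left, p_right))
    a = _dot(d_left, d_left)
    b = _dot(d_left, d_right)
    c = _dot(d_right, d_right)
    d = _dot(d_left, w0)
    e = _dot(d_right, w0)
    denom = a * c - b * b          # |d_left|^2 |d_right|^2 sin^2(angle between rays)
    if denom < eps:                # parallel gaze: fixation at (near) infinity
        return None
    t = (b * e - c * d) / denom    # parameter of closest point on the left ray
    s = (a * e - b * d) / denom    # parameter of closest point on the right ray
    if t < 0 or s < 0:             # closest approach is behind the driver
        return None
    q_left = tuple(p + t * v for p, v in zip(p_left, d_left))
    q_right = tuple(p + s * v for p, v in zip(p_right, d_right))
    return tuple((x + y) / 2 for x, y in zip(q_left, q_right))
```

For eyes 6 cm apart both looking at a point 60 cm straight ahead, the two rays meet exactly and the function returns that point; with noisy estimates it returns the point midway between the rays' closest approach.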

Deep learning solutions are generally computationally intensive, which typically calls for GPUs or high-end CPUs. Visteon and AllGo's model, by contrast, is optimized to run on a low-cost embedded platform.

Measures taken to improve performance range from choosing the right network and model (ideally the least complex one that still achieves the desired accuracy) to optimizing the model with newer deep learning techniques, such as replacing standard convolutional layers with depth-wise convolutional layers. Performance on the embedded platform is further optimized by choosing the right deep learning tool.
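To show why this substitution helps on a low-cost platform, the sketch below counts weights and multiply-accumulates (MACs) for one layer. The article does not give the network's layer sizes, so the channel counts are hypothetical. It also assumes the common MobileNet-style pattern in which a depth-wise convolution is followed by a 1×1 pointwise convolution (together a "depthwise separable" convolution), since a depth-wise layer alone does not mix information across channels.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k depth-wise conv (one filter per input channel)
    followed by a 1 x 1 pointwise conv that mixes channels (bias ignored)."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


def macs(weights: int, h_out: int, w_out: int) -> int:
    """MACs for a stride-1 layer where every weight is used once per output position."""
    return weights * h_out * w_out


if __name__ == "__main__":
    # Hypothetical layer: 3x3 kernel, 64 input channels, 128 output channels.
    std = conv_params(3, 64, 128)                  # 73728
    sep = depthwise_separable_params(3, 64, 128)   # 8768
    print(std, sep, round(std / sep, 2))           # prints: 73728 8768 8.41
```

In general the cost ratio is (k²·c_in + c_in·c_out) / (k²·c_in·c_out) = 1/c_out + 1/k², so with 3×3 kernels the saving approaches 9× as the number of output channels grows. Whether accuracy holds up after the swap has to be checked per model.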

Alongside the driver safety modules described in the paper, the deep learning-based DMS includes cabin monitoring modules that integrate face recognition, emotion detection and activity detection, for example recognizing when the driver is eating or using in-car displays.

The proposed solution, based on deep learning and run locally on embedded platforms, offers better results than traditional approaches. The team expects a significant increase in the deployment of deep learning-based artificial intelligence in the coming years, influencing virtually every segment of the automotive industry.

"With this solution, training happens offline using GPUs and CPUs, and can take anywhere from a couple of hours to a couple of days, depending on the amount of data used for the training, and the memory and speed of the machine it is running on. The quality of the solution is heavily dependent on the quality of the training with which the model is generated. Data is collected from people of different ethnicities, under different lighting conditions, with a range of expressions and various occlusions like glasses, scarves and hats. Each module will require a specialized way of collecting and annotating data," said Nirmal Sancheti, Vice President, Engineering, AllGo.