INSIDE AI: NVIDIA DRIVE ECOSYSTEM CREATES PIONEERING IN-CABIN FEATURES WITH NVIDIA DRIVE IX

>> Cerence, Smart Eye, Rightware and DSP Concepts develop intelligent convenience and safety capabilities for software-defined vehicles.

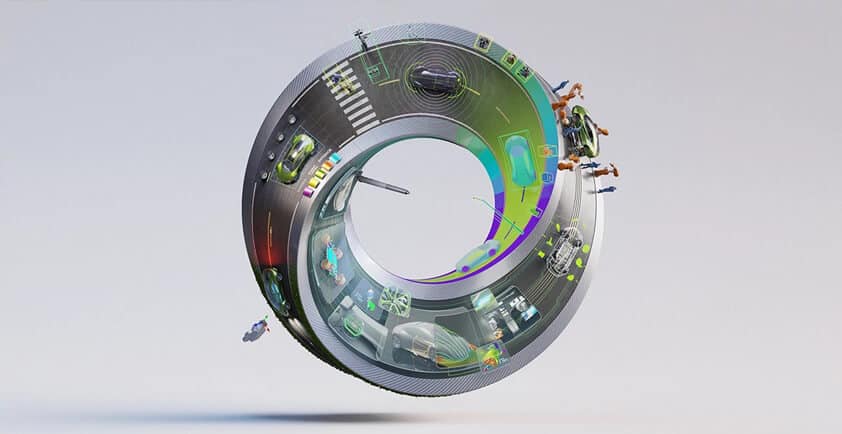

As personal transportation becomes electrified and automated, time in the vehicle has begun to feel more like time spent in a living space than a mind-numbing commute.

Companies are creating innovative ways for drivers and passengers to make the most of this experience, using the flexibility and modularity of NVIDIA DRIVE IX. In-vehicle technology companies Cerence, Smart Eye, Rightware and DSP Concepts are now using the platform to deliver intelligent features for every vehicle occupant.

These partners are joining a diverse ecosystem of companies developing on DRIVE IX, including SoundHound, Jungo and VisionLabs, providing cutting-edge solutions for any in-vehicle need.

DRIVE IX provides an open software stack for cockpit solution providers to build and deploy features that will turn personal vehicles into interactive environments, enabling intelligent assistants, graphical user interfaces, and immersive media and entertainment.

Intelligent Assistants

AI is transforming not just the way people drive, but also how they interact with cars.

Using speech, gestures and advanced graphical user interfaces, passengers can communicate with the vehicle through an AI assistant as naturally as they would with a human.

An intelligent assistant will help to operate vehicle functions more intuitively, warn passengers in critical situations and provide services such as sharing local updates like the weather forecast, making reservations and phone calls, and managing calendars.

Conversational AI Interaction

Software partner Cerence is enabling AI-powered, voice-based interaction with the Cerence Assistant, its intelligent in-car assistant platform.

Cerence Assistant uses sensor data to serve drivers throughout their daily journeys, notifying them, for example, when fuel or battery levels are low and navigating to the nearest gas or charging station.

It features robust speech recognition, natural language understanding and text-to-speech capabilities, enhancing the driver experience.

Using DRIVE IX, Cerence Assistant can run both embedded and cloud-based natural language processing on the same architecture, ensuring drivers have access to important capabilities regardless of connectivity.
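To make the idea of combining embedded and cloud-based natural language processing concrete, here is a minimal sketch of a hybrid fallback: a request goes to a cloud engine when the vehicle is connected and to an on-device engine otherwise. Every name in it (`Intent`, `embedded_nlu`, `cloud_nlu`, `understand`) is hypothetical and written for illustration only; it does not represent the Cerence Assistant or DRIVE IX APIs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Intent:
    name: str    # e.g. "navigate_to_charger"
    source: str  # which engine produced it: "cloud" or "embedded"


def embedded_nlu(utterance: str) -> Intent:
    """Hypothetical on-device engine with a small keyword-based intent set."""
    text = utterance.lower()
    if "charg" in text or "battery" in text:
        return Intent("navigate_to_charger", "embedded")
    if "call" in text:
        return Intent("phone_call", "embedded")
    return Intent("unknown", "embedded")


def cloud_nlu(utterance: str) -> Intent:
    """Hypothetical cloud engine; stands in for a real network request."""
    text = utterance.lower()
    if "reservation" in text or "book" in text:
        return Intent("make_reservation", "cloud")
    # For everything else, reuse the on-device rules but label the source.
    return Intent(embedded_nlu(utterance).name, "cloud")


def understand(
    utterance: str,
    is_connected: Callable[[], bool],
    cloud: Callable[[str], Intent] = cloud_nlu,
    embedded: Callable[[str], Intent] = embedded_nlu,
) -> Intent:
    """Prefer the cloud engine when online; otherwise use the embedded one."""
    if is_connected():
        try:
            return cloud(utterance)
        except ConnectionError:
            pass  # connection dropped mid-request: fall through to on-device
    return embedded(utterance)
```

In this sketch, a call such as `understand("Find a charging station", lambda: False)` is handled entirely on-device, so a core request still resolves without a network connection, while richer requests like reservations are served by the cloud engine when it is reachable.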

Cerence Assistant also supports major global markets and related languages, and is customizable for brand-specific speech recognition and personalization.

Gesture Recognition

In addition to speech, passengers can interact with AI assistants via gesture, which relies on interior sensing technologies.

Smart Eye is a global leader in AI-based driver monitoring and interior sensing solutions. Its production driver-monitoring system is already in 1 million vehicles on the roads around the world, and will be incorporated in the upcoming Polestar 3, set to be revealed in October.

Working with DRIVE IX, Smart Eye’s technology makes it possible to detect eye movements, facial expressions, body posture and gestures, bringing insight into people’s behavior, activities and mood.

Using NVIDIA GPU technology, Smart Eye has been able to speed up its cabin-monitoring system — which consists of 10 deep neural networks running in parallel — by more than 10x.

This interior sensing is critical for safety — ensuring driver attention is on the road when it should be and detecting left-behind children or pets — and it customizes and enhances the entire mobility experience for comfort, wellness and entertainment.

Graphical User Interface

AI assistants can communicate relevant information easily and clearly with high-resolution graphical interfaces. Using DRIVE IX, Rightware is creating a seamless visual experience across all cockpit and infotainment domains.

Rightware’s automotive human-machine interface tool Kanzi One helps designers bring amazing real-time 3D graphical user interfaces into the car and hides the underlying operating system and framework complexity. Automakers can completely customize the vehicle’s user interface with Kanzi One, providing a brand-specific signature UI.

Enhancing visual user interfaces with audio features is equally important for interacting with the vehicle. The Audio Weaver development platform from DSP Concepts can be integrated into the DRIVE IX advanced sound engine. It provides a sound design toolchain and audio framework for designing audio features graphically.

Creators and sound artists can design a car- and brand-specific sound experience without the hassle of writing complex code for low-level audio features from scratch.

Designing in Simulation

With NVIDIA DRIVE Sim on Omniverse, developers can integrate, refine and test all these new features in the virtual world before implementing them in vehicles.

Interior sensing companies can build driver- or occupant-monitoring models in the cockpit using the DRIVE Replicator synthetic-data generation tool on DRIVE Sim. Partners providing content for vehicle displays can first develop on a rich, simulated screen configuration.

Access to this virtual vehicle platform can significantly accelerate end-to-end development of intelligent in-vehicle technology.

With the flexibility of DRIVE IX combined with solutions from leading in-cabin providers, spending time in the car can become a luxury rather than a chore.

Author - Katie Burke - NVIDIA’s Automotive Content Marketing Manager