COMING IN 2020: MAJOR DATASET UPDATE TO NUSCENES BY APTIV

As we look back on 2019, we are proud of the role we played in a groundbreaking year for self-driving research and industry collaboration. In March, Aptiv released nuScenes, the industry’s first large-scale public dataset to provide information from a comprehensive autonomous vehicle (AV) sensor suite. In publishing an open-source AV dataset of this caliber, we knew we were filling a gap in an industry that has historically made little data available for research purposes. Our objective in releasing nuScenes by Aptiv was to enable the future of safe mobility through more robust industry research, data transparency and public trust.

The reception exceeded our expectations. Within the first months of the release, thousands of users and hundreds of top academic institutions downloaded and used the dataset. Soon after, we were excited to see other industry leaders, including Lyft, Waymo, Hesai, Argo, and Zoox, follow suit with their own datasets. At the same time, notable self-driving developers began asking to license nuScenes so that they could use the data to improve their own autonomous driving systems. It’s this type of collaboration and information sharing that supports our industry’s ‘safety first’ mission.

Today, we are proud to announce the next big step for Aptiv’s open-source data initiative. In the first half of 2020, our team will release two major updates to nuScenes: nuScenes-lidarseg and nuScenes-images. Commercial licensing will be available for both datasets.

nuScenes-lidarseg

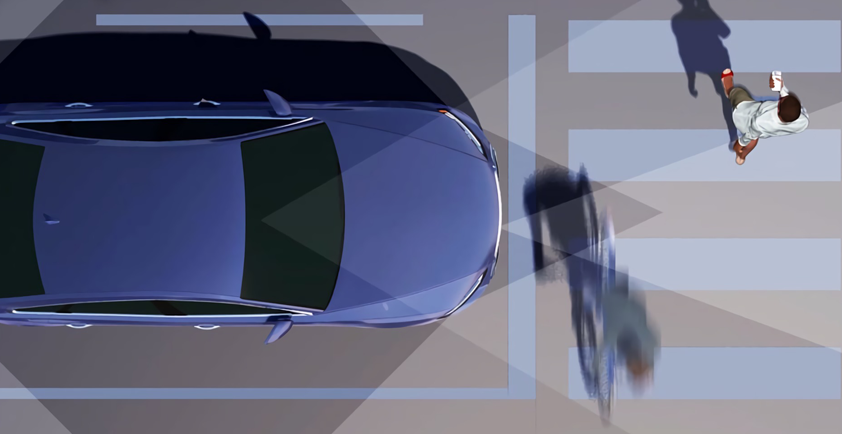

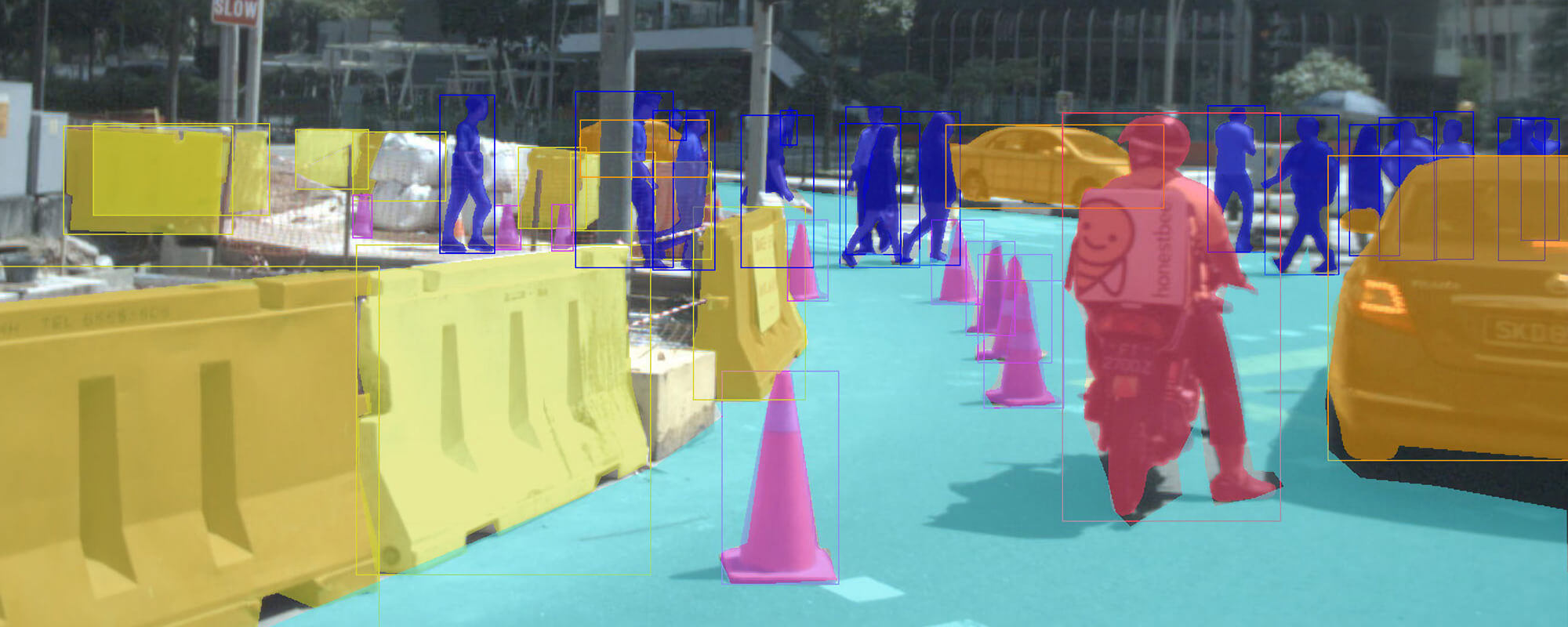

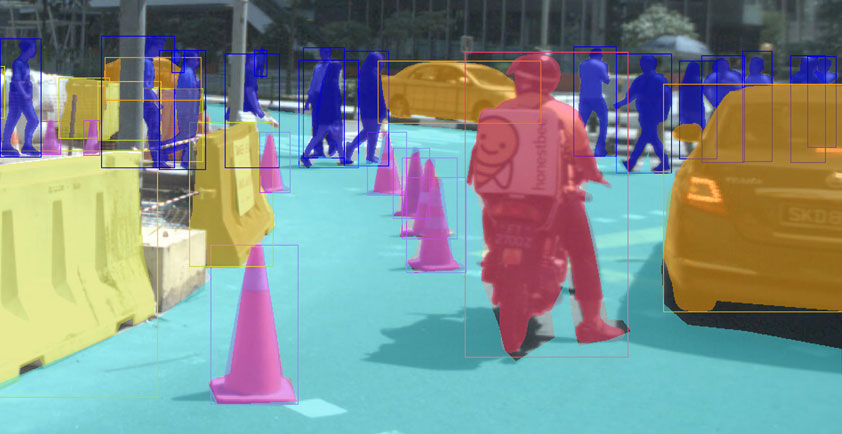

In the original nuScenes release, objects are represented by cuboids (also known as 3D bounding boxes) for 3D object detection. While this representation is useful in many cases, cuboids cannot capture the fine shape details of articulated objects. As self-driving technology continues to advance, vehicles need to perceive as much detail about the outside world as possible. For example, if a pedestrian or cyclist is using arm signals to communicate with other road users, the vehicle needs to recognize this and respond correctly.

To achieve this level of granularity, we are releasing nuScenes-lidarseg. This dataset annotates every single lidar point in the 40,000 keyframes of the nuScenes dataset with a semantic label: an astonishing 1,400,000,000 lidar points, each annotated with one of 38 labels.
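To make "one semantic label per lidar point" concrete, here is a minimal sketch. It assumes a hypothetical layout in which a keyframe's points and labels are two parallel sequences, so `labels[i]` is the class index of `points[i]`; this is an illustration only, not the official nuScenes-lidarseg file format or devkit API, and the names `label_histogram` and `AVG_POINTS_PER_KEYFRAME` are made up for this example. The figures quoted above also imply an average of 35,000 labelled points per keyframe.

```python
from collections import Counter
from typing import Sequence, Tuple

NUM_CLASSES = 38  # label count quoted in this announcement

# Average labelled points per keyframe implied by the quoted totals.
AVG_POINTS_PER_KEYFRAME = 1_400_000_000 // 40_000

# Hypothetical point layout: (x, y, z) coordinates in metres.
Point = Tuple[float, float, float]


def label_histogram(points: Sequence[Point], labels: Sequence[int]) -> Counter:
    """Count how many points in one keyframe carry each semantic label.

    Assumes labels[i] is the class index of points[i], i.e. exactly one
    label per lidar point (a hypothetical layout, not the real file format).
    """
    if len(points) != len(labels):
        raise ValueError("every lidar point needs exactly one label")
    for label in labels:
        if not 0 <= label < NUM_CLASSES:
            raise ValueError(f"label {label} outside 0..{NUM_CLASSES - 1}")
    return Counter(labels)


# Usage: three points, two sharing class 17 (class meanings are hypothetical).
hist = label_histogram([(1.0, 2.0, 0.1), (1.1, 2.0, 0.1), (5.0, -3.0, 0.0)], [17, 17, 24])
```

Keeping labels in a separate array parallel to the points, rather than embedding them in each point record, lets the same point cloud be reused with or without semantic annotations.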

This is a major step forward for nuScenes, Aptiv, and our industry’s open-source initiative, as it allows researchers to study and quantify novel problems such as lidar point cloud segmentation, foreground extraction and sensor calibration using semantics.

nuScenes-images

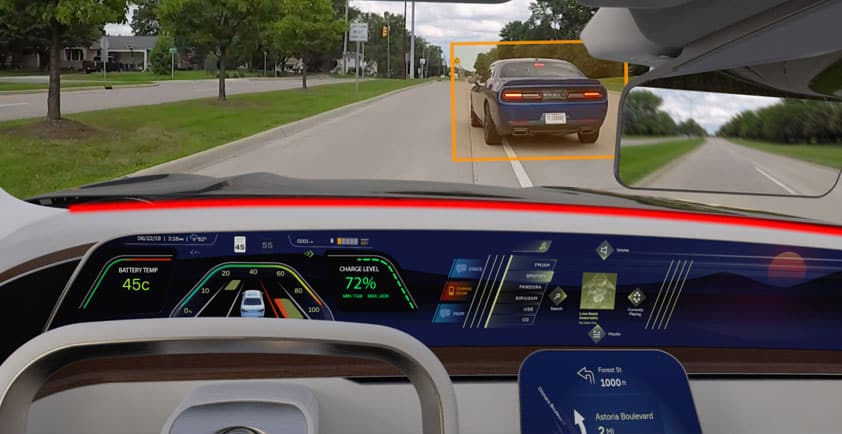

Leading scene-understanding algorithms perform well on common object classes such as cars, but it is a well-known problem that they perform poorly on rare classes (tricycles or emergency vehicles, for example). Furthermore, Level 5 autonomy will require vehicles to operate in varying conditions, including rain, snow and nighttime driving.

To build a dataset that covers such edge cases and unfavorable driving conditions, we ran data-mining algorithms over a large volume of collected data to select interesting images.

Our ambition is that the resulting nuScenes-images dataset will help self-driving cars operate safely in challenging and unpredictable scenarios.

nuScenes-images will consist of 100,000 images annotated with over 800,000 2D bounding boxes and instance segmentation masks for objects such as cars, pedestrians and bikes, as well as 2D segmentation masks for background classes such as drivable surface. The taxonomy is compatible with the rest of nuScenes, enabling a wide range of research across multiple sensor modalities.

The new data content will be released in the first half of 2020.

Author: Oscar Beijbom - Machine Learning Director, Aptiv

APTIV LEADS AUTONOMOUS VEHICLE SAFETY, RELEASES INDUSTRY'S LARGEST PUBLIC DATASET FOR AUTONOMOUS DRIVING

Aptiv PLC, a global technology company enabling the future of mobility, announced today the full release of nuScenes by Aptiv, an open-source autonomous vehicle (AV) dataset. As the first company to share safety data of this caliber with the public, Aptiv is filling a gap in an AV industry that has historically limited open-sourcing data for research purposes. By sharing critical safety data in nuScenes with the public, Aptiv aims to support broad research into computer vision and autonomous driving by AV innovators and academic researchers, further advancing the mobility industry.

As the first large-scale public dataset to provide information from a comprehensive AV sensor suite, nuScenes by Aptiv is organized into 1,000 “scenes” collected in Boston and Singapore, representing some of the most complex driving scenarios in each urban environment. The nuScenes dataset comprises 1.4 million images, 390,000 LiDAR sweeps and 1.4 million human-annotated 3D bounding boxes, making it the largest multimodal 3D AV dataset released to date.

Providing public data of this kind not only gives academic researchers and industry experts access to carefully curated safety data, but also enables robust progress and innovation in the industry. To date, over 1,000 users and over 200 academic institutions have registered to access the nuScenes dataset.

“At Aptiv, we believe that we make progress as an industry by sharing, especially when it comes to safety,” said Karl Iagnemma, president of Aptiv Autonomous Mobility. “Our team thought carefully about the components of our data that we could open to the public in order to enable safer, smarter systems across the entire autonomous vehicle space. We appreciate the importance of transparency and building trust in AVs, and we look forward to sharing nuScenes by Aptiv, information that has traditionally been kept confidential, with academic communities, cities, and the public at large.”

To explore the dataset, visit nuScenes.org.