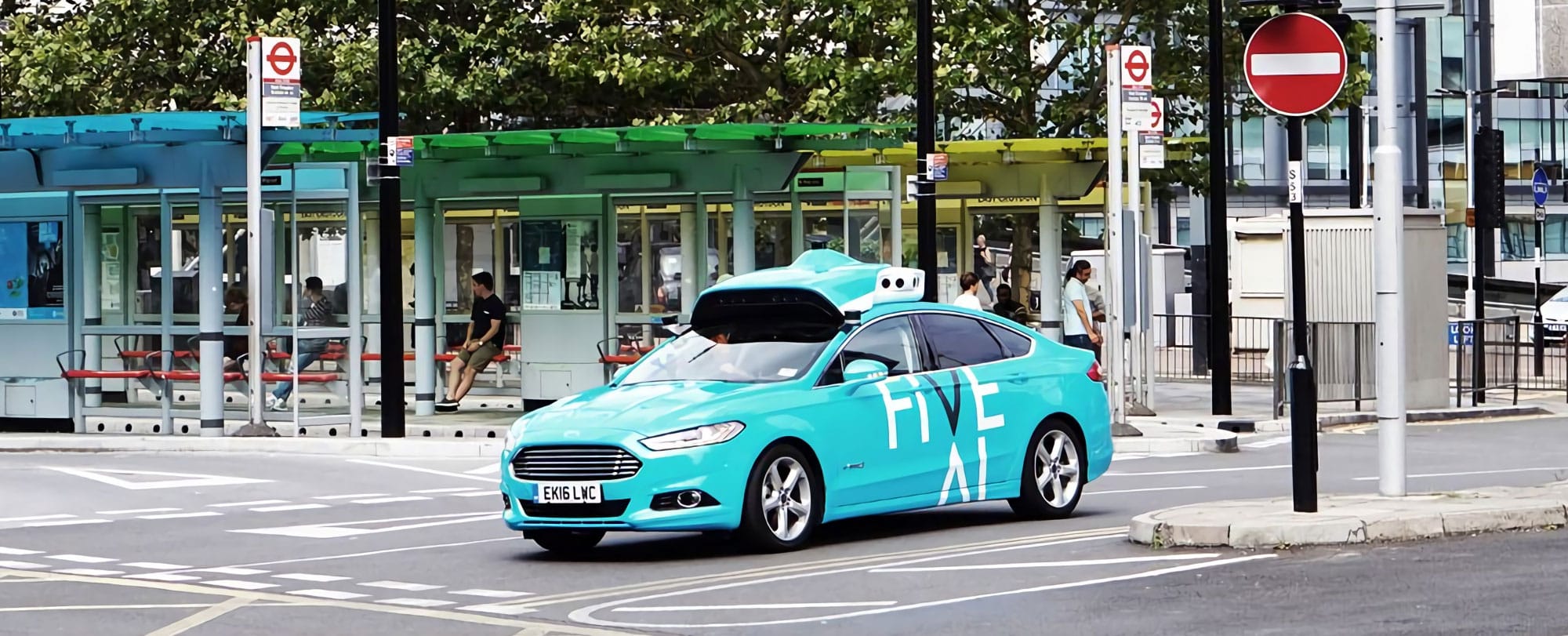

HOW FIVEAI IS DEVELOPING SAFE SELF-DRIVING TECHNOLOGY FOR EUROPE

We’re building the software that will make shared self-driving mobility a reality in Europe. And that reality is going to trigger the most amazing and positive change in the way we live.

But self-driving systems are mighty complex — and the real world they must interact with is infinitely so — which makes the problem both super interesting and super high value to solve. We like those problems.

We’ve assembled some of the brightest minds in AI, computer vision, prediction and robotics. They are working together here at FiveAI to make sure we’ve thought deeply about every piece of the puzzle and how we fit it together. Our system is already performing incredibly well, and that capability gives us a real edge in several areas across the stack.

Yet there’s a fundamental issue that hasn’t been solved by anyone in the space. How do we find where these systems fail, and what can we do to assure their safety? We were drawn to that challenge.

We got to thinking, where else have we seen these problems? What tools and techniques have we used in the past? And can we deploy a safe system for Europe’s tangled roads without it costing us literally billions? Is there a capital-efficient answer?

We have some surprising and encouraging answers to all those questions. They borrow from two different but related worlds that we know well from our experience as a team of co-founders: the chip industry and the wireless industry.

Learning from the chip industry

You may have seen our self-driving technology being tested on the streets of London, but that’s just part of the picture. Behind the scenes there’s a much bigger program that you can’t see, and it involves simulation.

Large-scale simulation became the secret to finding bugs and testing systems in both the chip and wireless worlds as systems became ever more complex, and bugs ever harder to find.

A lot can go wrong as transmitted radio waves interact with the real world and are then sampled at the receiver and turned back into digits. Vast amounts of algorithmic processing are needed to correct errors and extract signal from noise, meaning the radio chips have to deliver ultra-reliable high performance and the digital chips must perform billions of complex math operations every second without making mistakes.

We’ve found the analogies, and we’re applying the techniques used to develop screaming-edge wireless chips (abstraction, extraction, simulation, verification and validation) to bring safe driverless technology to Europe.

When we do that, we get some huge paybacks:

> we verify our system to a high human bar for safety

> we find defects in our software components fast and correct them

> we massively cut the cost of developing a level 4 self-driving system (no expectation of any driver in the vehicle)

> we deploy our technology with confidence on public roads, with no need to retreat to campuses, warehouses or airfields…

Setting a high bar for safety

Of course, there’s no room for one-in-a-million bugs when it comes to a self-driving system.

Everything we do at FiveAI is driven by safety. We won’t put our vehicles on public roads unless they are at least as safe as human driving, and that’s a very high bar given the current state of the art in the underlying sciences we are all using and the many confounders there are in the world.

Meeting that safety target requires a rigorous, structured approach. Ours, learnt at the cutting edge of the wireless chip industry, is not just the best way to go about it; it’s probably the only way.

Complexity as standard

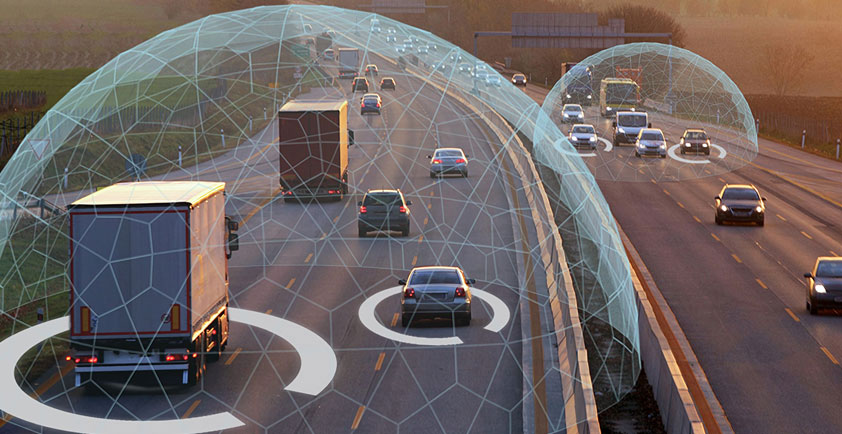

Any self-driving system is a complex assembly of cross-dependent and interacting software and hardware components, each prone to limitations or error. Several of the components use neural networks for object detection, type classification, action prediction and other critical tasks.

That system needs to operate safely in the slice of the world as we define it — our Operational Design Domain (ODD). And this is the first time, really, that neural networks have been applied to a safety-critical ODD. And that ODD itself — the city streets of Europe — holds infinite possibilities, with variables including road topologies, users, appearances, lighting, weather, behaviours, seasons, velocities, randomness and deliberate actions.

If this is making you feel nervous, then that’s a good response.

In computer science, we refer to the fundamental problem as state space explosion. As the number of variables in an overall system increases, the size of the system ‘state space’ (the combination of states across all variables) grows exponentially.
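To make the exponential growth concrete, here is a minimal Python sketch. The variable names and the number of discrete values assigned to each are hypothetical illustrations, not FiveAI’s actual ODD parameterisation; the only point is that the joint state count is the product of the per-variable counts, so every added variable multiplies it.

```python
from math import prod

# Hypothetical ODD variables, each coarsely discretised into a few values.
# The names and value counts are illustrative assumptions, not a real ODD spec.
ODD_VARIABLES = {
    "road_topology": 20,
    "road_user_type": 10,
    "lighting": 5,
    "weather": 6,
    "season": 4,
    "ego_speed_band": 8,
    "agent_behaviour": 12,
}

def state_space_size(cardinalities):
    """Joint state count = product of the number of values each variable can take."""
    return prod(cardinalities)

total = state_space_size(ODD_VARIABLES.values())
print(f"{len(ODD_VARIABLES)} variables -> {total:,} joint states")

# With n variables of k values each the count is k**n: exponential in n.
for n in (1, 5, 10, 20):
    print(f"{n:>2} variables of 10 values -> {state_space_size([10] * n):.1e} states")
```

Even this coarse discretisation gives 2,304,000 joint states, and real variables such as velocity are continuous rather than a handful of bands.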

The outcome? Our performance in the ODD also becomes exponentially harder to model, and it becomes ever more difficult and expensive to hunt down potential defects that could lead to unsafe behaviors.

Now here’s the good news — we’ve seen similar complexity before. We know that it can be tackled with the right combination of methodologies, techniques and tools.

Two parallels stand out. The first is how we used channel models to simplify wireless propagation in increasingly complex real-world environments while maintaining its statistical accuracy. That made the development of wireless systems feasible and predictable. The second is how the Electronic Design Automation (EDA) industry developed tools that allowed us to search for bugs and fix them in huge, complex chip models whose state spaces were close to infinite.

It turns out we can apply both techniques, in different ways, to develop and verify self-driving technology. It’s very cool.

1. Using channel models to extract and abstract real world complexity: Just like the ODD for our self-driving technology, the world in which mobile phones have to perform is also highly varied.

What happens is that the relatively clean radio wave leaving the transmitter travels through space and time to be picked up by a receiver. Along the way, its energy and waveform get distorted by whatever lies between the two: the propagation channel. Most of the wireless algorithms running in a receiver are concentrated on undoing the dispersion of energy in space and time, and on extracting the signal from the noise in the channel.
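A standard way to express this is to treat the channel as a linear filter: the received signal is the transmitted signal convolved with the channel’s impulse response (one tap per propagation path), plus noise. The pure-Python sketch below assumes a hypothetical three-path channel; the tap delays and gains are illustrative, not measured values.

```python
import random

def convolve(x, h):
    """Full discrete convolution of two sequences."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

# Hypothetical three-path channel: a direct path plus two weaker reflections
# arriving 2 and 5 samples later (tap index = delay in samples).
CHANNEL_TAPS = [1.0, 0.0, 0.5, 0.0, 0.0, 0.2]

def propagate(tx, taps, noise_std, rng):
    """Received signal = transmitted signal convolved with the taps, plus Gaussian noise."""
    multipath = convolve(tx, taps)[: len(tx)]  # keep the same length as the input
    return [s + rng.gauss(0.0, noise_std) for s in multipath]

rng = random.Random(0)
tx = [rng.choice([-1.0, 1.0]) for _ in range(8)]  # clean BPSK symbols
rx = propagate(tx, CHANNEL_TAPS, noise_std=0.1, rng=rng)
for sent, received in zip(tx, rx):
    print(f"sent {sent:+.0f}  received {received:+.3f}")
```

Feeding in a single impulse with no noise returns the taps themselves, which shows the dispersion directly: energy sent at one instant arrives smeared over six samples and overlaps later symbols.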

Things get even more complicated when you start moving, as Doppler effects shift the frequency of that messy signal. You can start to see how much channels vary in complexity just by comparing a clear line-of-sight transmission between two hilltops with driving through a city full of skyscrapers, cars, trucks and people, each creating reflections, refractions and dispersions of the signal.
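The size of that frequency shift follows from the standard Doppler relation f_D = (v / c) · f_c · cos θ, where v is the receiver’s speed, c the speed of light, f_c the carrier frequency and θ the angle between the direction of motion and the arriving wave. The sketch below evaluates it for assumed example values (city driving at 50 km/h, a 2 GHz carrier); these numbers are illustrations, not parameters from the original text.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def doppler_shift_hz(speed_kmh, carrier_hz, angle_deg=0.0):
    """Doppler shift f_D = (v / c) * f_c * cos(theta) for a moving receiver."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v / SPEED_OF_LIGHT * carrier_hz * math.cos(math.radians(angle_deg))

# Assumed example values: 50 km/h and a 2 GHz carrier.
for angle in (0, 60, 90, 180):
    print(f"{angle:>3} deg: {doppler_shift_hz(50, 2e9, angle):+7.2f} Hz")
```

In a city, reflections arrive from many angles at once, so instead of one clean shift the received energy is spread across a band of roughly ±93 Hz around the carrier.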

To develop and test any wireless system, understanding and modelling the channel accurately is a vital step. You could try to model the physics, topology and properties of every single channel from first principles, but it would take a very long time and would require huge amounts of computation. Plus it would still be wrong in some way and there would be an infinite number of these to model!

So in wireless, you just wouldn’t do that. Instead, channel types are characterised in the real world and statistical models are built of those channel behaviors. All sorts of channels can be characterised and modeled, an essential piece of physical layer simulation for the development of wireless systems. And they have two important attributes — they are statistically representative, and they are computed inexpensively in simulation.
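One widely used statistical model of this kind is Rayleigh fading on a tapped delay line: each path’s complex gain is drawn as a zero-mean complex Gaussian whose average power follows a power delay profile. The pure-Python sketch below uses a hypothetical three-tap profile rather than any standardised channel model. It illustrates the two attributes above: each realisation is random, yet the averaged statistics match the profile, and drawing them costs almost nothing.

```python
import math
import random

# Hypothetical power delay profile: (delay in samples, average linear power).
POWER_DELAY_PROFILE = [(0, 0.6), (2, 0.3), (5, 0.1)]

def sample_taps(profile, rng):
    """Draw one channel realisation: a Rayleigh-fading complex gain per tap."""
    taps = {}
    for delay, power in profile:
        sigma = math.sqrt(power / 2)  # split the power equally between I and Q
        taps[delay] = complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
    return taps

rng = random.Random(42)
n = 20_000
avg_power = {delay: 0.0 for delay, _ in POWER_DELAY_PROFILE}
for _ in range(n):
    for delay, gain in sample_taps(POWER_DELAY_PROFILE, rng).items():
        avg_power[delay] += abs(gain) ** 2 / n

for delay, target in POWER_DELAY_PROFILE:
    print(f"delay {delay}: target power {target:.2f}, simulated {avg_power[delay]:.3f}")
```

Each tap’s magnitude is Rayleigh-distributed, so individual realisations fade deeply, while the average power per delay converges to the profile.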

Perception systems for driverless vehicles look a lot like wireless propagation channels. Like channels, they can also be characterised with statistical accuracy, and they can be driven hard in simulation at low computational expense. Exactly how we use this idea in simulating the performance of these perception systems in typical ODDs is for another day, but the key message is that we can learn from how wireless physical layer simulation has made a very real impact on the speed of simulation, its cost and its accuracy.
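By analogy, a perception system can be replaced in simulation by a statistical error model that takes ground-truth objects and returns plausibly corrupted detections. The article deliberately doesn’t describe FiveAI’s own approach, so the sketch below is a hypothetical illustration only: detection probability falls off exponentially with range and position errors are Gaussian with a range-dependent spread. Every name and parameter is an assumption; in practice such parameters would be fitted to real-world measurements of the perception stack.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class Obj:
    x: float  # metres ahead of the ego vehicle
    y: float  # metres to the left of the ego vehicle

# Hypothetical model parameters (assumptions, not measured values).
P_DETECT_AT_ZERO = 0.99   # detection probability at zero range
RANGE_SCALE_M = 80.0      # range scale of the exponential fall-off
BASE_STD_M = 0.05         # position noise at zero range, metres
STD_PER_METRE = 0.01      # extra position noise per metre of range

def perceive(truth, rng):
    """Statistical stand-in for a perception stack: miss some objects, jitter the rest."""
    detections = []
    for obj in truth:
        distance = math.hypot(obj.x, obj.y)
        p_detect = P_DETECT_AT_ZERO * math.exp(-distance / RANGE_SCALE_M)
        if rng.random() < p_detect:
            std = BASE_STD_M + STD_PER_METRE * distance
            detections.append(Obj(obj.x + rng.gauss(0.0, std), obj.y + rng.gauss(0.0, std)))
    return detections

def detection_rate(obj, frames, rng):
    """Fraction of simulated frames in which a single object is detected."""
    return sum(len(perceive([obj], rng)) for _ in range(frames)) / frames

rng = random.Random(7)
print(f"10 m: {detection_rate(Obj(10.0, 0.0), 10_000, rng):.3f}")
print(f"60 m: {detection_rate(Obj(60.0, 0.0), 10_000, rng):.3f}")
```

Because each call is a few arithmetic operations rather than a full neural-network inference on rendered sensor data, millions of frames can be simulated cheaply.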

2. Using simulation to find the needle in a haystack: We can also learn from the techniques developed by the chip industry to reduce the occurrence of critical bugs in production chips.

In the early days of the silicon chip, design was based on estimation and assumption. But once chips reached a certain level of complexity, it became more difficult to guarantee prototypes would work as expected.

To address this, EDA tools were developed so that circuit design no longer had to rely on trial and error, dramatically reducing the cost and improving the speed of chip development. As microprocessors grew more complex through the 1980s, more advanced EDA tools were needed to deal with the increased complexity. Amongst them were logic simulators, which led to huge advances in the success and speed of development of chip designs.

A watershed moment occurred in 1994, when Intel recalled its Pentium processors due to an incomplete lookup table in its floating point unit. It affected the fifth decimal place onwards in calculation results, and even then only in about one in nine billion divisions, but it still resulted in a $475 million loss for the company, absolutely huge at the time. Exhaustive logic simulation of the component containing the bug was computationally infeasible: it would have taken hundreds of years to test all possible states.
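A rough calculation shows why exhaustive simulation is out of reach. Assume, purely for illustration, a unit whose behaviour depends on 64 bits of input, and a simulator that checks a billion cases per second (optimistic for gate-level logic simulation). Both figures are assumptions, not specifications of the actual Pentium floating point unit, whose division operands were wider still.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def exhaustive_years(input_bits, cases_per_second):
    """Years needed to simulate every combination of `input_bits` input bits."""
    return 2 ** input_bits / cases_per_second / SECONDS_PER_YEAR

# Illustrative assumptions only: 64 input bits, 1e9 simulated cases per second.
print(f"{exhaustive_years(64, 1e9):,.0f} years")
```

That comes to roughly 585 years, and each additional input bit doubles it, which is why verification had to become targeted rather than exhaustive.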

Companies just couldn’t afford to risk such bugs going unfound, and this gave rise to new tools that could describe chip functionality in abstracted, but precise, behavioural models that allowed fast automated analysis and simulation. Additional tools were developed to describe verification test sequences and drive them manually. This led to random test generation techniques and, later, directed random test generation.
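The difference between pure random and directed random stimulus can be sketched in a few lines of Python. The design under test is a hypothetical 16-bit saturating adder with a deliberately planted corner-case bug, checked against a reference (“golden”) model. Uniform random operands hit the bug about once in 2^32 tests, while biasing operands towards boundary values finds it almost immediately. The bias value and corner list are illustrative choices.

```python
import random

MAX = 0xFFFF  # 16-bit unsigned operands

def reference_add(a, b):
    """Golden model: saturating 16-bit addition."""
    return min(a + b, MAX)

def dut_add(a, b):
    """Hypothetical design under test with a deliberately planted corner-case bug."""
    if a == MAX and b == 1:
        return 0  # bug: wraps around instead of saturating
    return min(a + b, MAX)

CORNERS = [0, 1, MAX - 1, MAX]

def random_operand(rng):
    """Unconstrained random stimulus: uniform over the whole input range."""
    return rng.randint(0, MAX)

def directed_operand(rng, corner_bias=0.5):
    """Directed random stimulus: biased towards boundary values."""
    if rng.random() < corner_bias:
        return rng.choice(CORNERS)
    return rng.randint(0, MAX)

def run(gen, n_tests, seed):
    """Compare DUT with the reference model; return (test index, inputs) of the first mismatch."""
    rng = random.Random(seed)
    for i in range(n_tests):
        a, b = gen(rng), gen(rng)
        if dut_add(a, b) != reference_add(a, b):
            return i, (a, b)
    return None

print("random:  ", run(random_operand, 10_000, seed=1))
print("directed:", run(directed_operand, 10_000, seed=1))
```

With the directed generator, each test has at least a 1-in-64 chance of landing on the failing pair, so 10,000 tests are all but certain to expose it.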

What became important was that bug searching could now be targeted at specific places in the high-dimensional space, and test coverage (for example, lines of code exercised or state space explored) could be accurately measured, an approach known as coverage-based verification. In parallel, techniques were also developed to prove mathematically that specific, important properties of complex systems hold under all conditions, a discipline known as formal verification.
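Formal tools use many techniques; one of the simplest to illustrate is explicit-state model checking, which enumerates every reachable state of a finite model and checks a safety property in each, so a pass is a proof for that model rather than a sample. The two-road junction controller below is a hypothetical toy, and industrial tools rely on symbolic methods (such as BDDs or SAT/SMT solvers) to handle far larger state spaces.

```python
from collections import deque

# Hypothetical toy model of a two-road junction.
# State = (north-south light, east-west light); each light cycles G -> Y -> R.
INITIAL = ("G", "R")

def successors(state):
    """All states the controller can move to in one step."""
    ns, ew = state
    if ns == "G":
        yield ("Y", ew)
    elif ns == "Y":
        yield ("R", ew)
    elif ns == "R" and ew == "R":
        # Both red: either road may be given green next (nondeterministic choice).
        yield ("G", "R")
        yield ("R", "G")
    if ew == "G":
        yield (ns, "Y")
    elif ew == "Y":
        yield (ns, "R")

def buggy_successors(state):
    """Same controller with a planted bug: N-S turns green unless E-W is yellow."""
    ns, ew = state
    yield from successors(state)
    if ns == "R" and ew != "Y":
        yield ("G", ew)  # bug: should also require ew == "R"

def safe(state):
    """Safety property: the two roads are never green at the same time."""
    return not (state[0] == "G" and state[1] == "G")

def check(initial, next_states, prop):
    """Breadth-first search of reachable states; return (counterexample or None, states seen)."""
    seen = {initial}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if not prop(state):
            return state, len(seen)
        for nxt in next_states(state):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return None, len(seen)

print("correct controller:", check(INITIAL, successors, safe))
print("buggy controller:  ", check(INITIAL, buggy_successors, safe))
```

The correct controller has five reachable states, all safe, so the property is proved for this model; the buggy one yields the counterexample ("G", "G").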

These important techniques and tools can now be applied to exponentially complex ODDs, like European city streets.
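As a hedged illustration of what that transfer might look like, the sketch below treats a driving scenario as a point in a small parameter space, runs each randomly sampled scenario through a toy braking model, and records both the failures and which coverage bins were exercised. The scenario (a pedestrian stepping out ahead), the stopping-distance formula (reaction distance v·t plus braking distance v²/2a), the parameter ranges and the 3×3 bins are all simplifying assumptions, not FiveAI’s simulator.

```python
import random

# Hypothetical scenario parameters and their ranges.
SPEED_RANGE = (5.0, 20.0)      # ego speed, m/s
DISTANCE_RANGE = (5.0, 60.0)   # distance to a pedestrian stepping out, m
LATENCY_RANGE = (0.1, 1.0)     # perception plus reaction latency, s
DECEL = 6.0                    # assumed braking deceleration, m/s^2

def stops_in_time(speed, distance, latency):
    """Toy check: stopping distance = v * latency + v^2 / (2 * a)."""
    stopping = speed * latency + speed ** 2 / (2 * DECEL)
    return stopping < distance

def bin_of(speed, distance):
    """Coverage bin: 3 speed bands x 3 distance bands."""
    speed_band = min(int((speed - SPEED_RANGE[0]) / 5.0), 2)
    width = (DISTANCE_RANGE[1] - DISTANCE_RANGE[0]) / 3
    distance_band = min(int((distance - DISTANCE_RANGE[0]) / width), 2)
    return speed_band, distance_band

def search(n, seed):
    """Sample n scenarios; return the failing ones and the set of bins covered."""
    rng = random.Random(seed)
    failures, covered = [], set()
    for _ in range(n):
        speed = rng.uniform(*SPEED_RANGE)
        distance = rng.uniform(*DISTANCE_RANGE)
        latency = rng.uniform(*LATENCY_RANGE)
        covered.add(bin_of(speed, distance))
        if not stops_in_time(speed, distance, latency):
            failures.append((speed, distance, latency))
    return failures, covered

failures, covered = search(5_000, seed=0)
print(f"{len(failures)} failing scenarios; {len(covered)}/9 coverage bins hit")
```

A failing sample is a starting point for investigation, and any empty coverage bin shows where the search has not yet looked.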

In a self-driving system, no stone can be left unturned

Although the Pentium bug was a serious issue for Intel at the time, the impact was relatively low — maybe the odd incorrect calculation in a spreadsheet. In the self-driving space, the systems we’re developing are many times more complex, and the stakes are astronomically high. Even if the ‘bugs’ we’re looking for are as unlikely as a Pentium error, the result could be people getting hurt.

Meeting our safety target means rigorously hunting down the defects in our system that, in specific real-world circumstances, could lead to unsafe behaviours.

That requires a meticulous approach. Ours, inspired by the rigour and techniques of the wireless chip industry, will help us solve the self-driving safety problem both quickly and capital-efficiently.

So the revolution in our cities might be closer than you think. And that has to be good news, right?

Author: Stan Boland, Co-founder and Chief Executive Officer at FiveAI.
